The post Taming the Beast: A Developer’s Deep Dive into SFCC Meta Tag Rules appeared first on The Rhino Inquisitor.

At some point in your Salesforce B2C Commerce Cloud career, you’ve been handed The Spreadsheet. It’s a glorious, terrifying document, often with 10,000+ rows, meticulously crafted by an SEO team. Each row represents a product, and each column contains the perfect, unique meta title and description destined to win the favour of the Google gods. Your heart sinks. You see visions of tedious data imports, endless validation, and the inevitable late-night fire drill when someone screams, “The staging data doesn’t match production!”.

Most of us have glanced at the “Page Meta Tag Rules” section in Business Manager, shrugged, and moved on to what we consider ‘real’ code. That’s a mistake. This isn’t just another BM module for merchandisers to tinker with; it’s a declarative engine for automating one of the most tedious and error-prone parts of e-commerce SEO. It’s a strategic asset for developers to build scalable, maintainable, and SEO-friendly sites.

This guide will dissect this powerful feature from a developer’s perspective. We’ll tame this beast by exploring its unique syntax, demystifying the “gotchas” of its inheritance model, and outlining advanced strategies for PDPs, PLPs, and even those tricky Page Designer pages. By the end, you’ll know how to leverage this tool to make your life easier and your SEO team happier, all without accidentally nuking their hard work.

The Anatomy of a Rule: Beyond the Business Manager UI

The first mental hurdle to clear is that Meta Tag Rules are not an imperative script. They are a declarative system. You are not writing code that executes line by line. Instead, you are defining a set of instructions—a recipe—that the platform’s engine interprets to generate a string of text. This distinction is fundamental because it dictates how these rules are built, tested, and debugged.

It’s a specialised, declarative Domain-Specific Language (DSL), not a general-purpose scripting environment like Demandware Script. This explains why you can’t just call arbitrary script APIs from within a rule and why the error feedback is limited. It’s about defining what you want the output to be and letting the platform’s engine figure out how to generate it.

The Three Pillars of Rule Creation

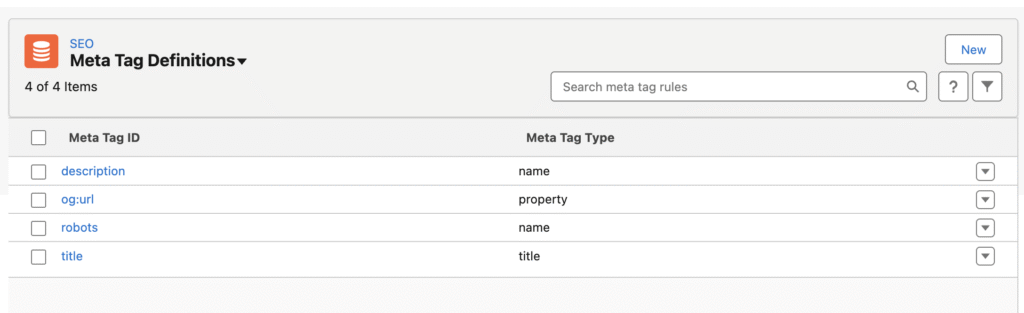

The process of creating a rule within Business Manager at Merchant Tools > SEO > Page Meta Tag Rules can be broken down into three logical steps:

Meta Tag Definitions (The "What")

This is where you define the type of HTML tag you intend to create. Think of it as defining the schema for your output. You specify the Meta Tag Type (e.g., name, property, or title for the <title> tag) and the Meta Tag ID (e.g., description, keywords, og:title). For a standard meta description, the Type would be name and the ID would be description, which corresponds to <meta name="description"...>.
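To make the Type/ID pairing concrete, here is a minimal sketch of how a definition maps to the tag it represents. The `renderMetaTag` and `escapeHtml` helpers are hypothetical names invented for this illustration; the platform does this rendering for you, so treat the code as a mental model rather than anything you would deploy.

```javascript
// Hypothetical helpers, for illustration only: the platform renders these tags itself.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Maps a definition ({ type, id }) plus generated content to the HTML it stands for.
function renderMetaTag(definition, content) {
  const safeContent = escapeHtml(content);
  if (definition.type === "title") {
    return `<title>${safeContent}</title>`;
  }
  if (definition.type === "name" || definition.type === "property") {
    return `<meta ${definition.type}="${escapeHtml(definition.id)}" content="${safeContent}">`;
  }
  throw new Error(`Unsupported meta tag type: ${definition.type}`);
}

console.log(renderMetaTag({ type: "name", id: "description" }, "Lightweight trail shoes"));
console.log(renderMetaTag({ type: "property", id: "og:title" }, "SummitPro Runner"));
console.log(renderMetaTag({ type: "title", id: "title" }, "SummitPro Runner | GoOutdoors"));
```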

Rule Creation & Scopes (The "How" and "Where")

This is the core logic. You create a new rule, give it a name, and associate it with one of the Meta Tag IDs you just defined. Critically, you must select a Scope. The scope (e.g., Product, Category/PLP, Content Detail/CDP) is the context in which the rule is evaluated. It determines which platform objects and attributes are available to your rule’s syntax.

For example, the Product object is available in the Product scope, but not in the Content Listing Page scope.

Assignments (The "Who")

Once a rule is defined, you must assign it to a part of your site. You can assign a rule to an entire catalog, a specific category and its children, or a content folder. This assignment triggers the platform to use your rule for the designated pages.

The Syntax Cheat Sheet: Your Rosetta Stone

The rule engine has its own unique syntax, which is essential to master. All dynamic logic must be wrapped in ${...}.

Accessing Object Attributes: The most common action is pulling data directly from platform objects. The syntax is straightforward: Product.name, Category.displayName, Content.ID, or Site.httpHostName. You can access both system and custom attributes, though some data types like HTML, Date, and Image are not supported.

Static Text with Constant(): To include a fixed string within a dynamic expression, you must use the Constant() function, such as Constant('Shop now at '). This is vital for constructing readable sentences.

Mastering Conditional Logic

The real power of the engine lies in its conditional logic. This is what allows for the creation of intelligent, fallback-driven rules.

The IF/THEN/ELSE Structure: This is the workhorse of the rule engine. It allows you to check for a condition and provide different outputs accordingly.

The Mighty ELSE (The Hybrid Enabler): The ELSE operator is the key to creating a “hybrid” approach that respects manual data entry. A rule like ${Product.pageTitle ELSE Product.name} first checks for a value in the manually-entered pageTitle attribute. If, and only if, that field is empty, it falls back to using the product’s name. This single technique is the most important for preventing conflicts between automated rules and manual overrides by merchandisers.

Combining with AND and OR: These operators allow for more complex logic. AND requires both expressions to be true, while OR requires only one. They also support an optional delimiter, like AND(' | '), which elegantly joins two strings with a separator, but only if both strings exist. This prevents stray separators in your output.

Equality with EQ: For direct value comparisons, use the EQ operator. This is particularly useful for logic involving pricing, for instance, to check if a product has a price range (ProductPrice.min EQ ProductPrice.max) or a single price.
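If the operators feel abstract, it can help to model them as plain functions. The sketch below is only a mental model of the semantics described above, written in JavaScript; `hasValue`, `orElse`, `and`, `or`, and `eq` are names made up for this example and are not part of the rule engine or any platform API. The behaviour of OR with a delimiter is my reading of "requires only one", so verify it against the preview in Business Manager.

```javascript
// Mental model only: these functions imitate the operators as described in this article.

// Treat null, undefined, and blank strings as "no value".
function hasValue(value) {
  return value !== null && value !== undefined && String(value).trim() !== "";
}

// ELSE: use the first operand when it has a value, otherwise the second.
function orElse(first, second) {
  if (hasValue(first)) return String(first);
  return hasValue(second) ? String(second) : "";
}

// AND(delimiter): join only when BOTH operands have a value, otherwise produce nothing.
function and(left, right, delimiter = "") {
  return hasValue(left) && hasValue(right) ? `${left}${delimiter}${right}` : "";
}

// OR(delimiter): keep whichever operands have a value (assumed behaviour).
function or(left, right, delimiter = "") {
  return [left, right].filter(hasValue).join(delimiter);
}

// EQ: direct value comparison, e.g. minimum price versus maximum price.
function eq(left, right) {
  return left === right;
}

console.log(orElse("", "SummitPro Runner"));
console.log(and("SummitPro Runner", "Peak Performance", " | "));
console.log(and("SummitPro Runner", "", " | "));
```

The last call is the interesting one: with an empty second operand, AND yields an empty string rather than a dangling separator.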

The Cascade: Understanding Inheritance, Precedence, and the Hybrid Approach

The Meta Tag Rules engine was designed with the “Don’t Repeat Yourself” (DRY) principle in mind. The inheritance model, or cascade, allows you to define a rule once at a high level, such as the root of your storefront catalog, and have it automatically apply to all child categories and products. This is incredibly efficient, but only if you understand the strict, non-negotiable lookup order the platform uses to find the right rule for a given page.

I’m not going to go into much detail here, as a complete fallback system is documented.
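Still, the core idea of the cascade fits in a few lines. The sketch below is a deliberately simplified model: it only shows "walk up the tree until an assignment is found". The category IDs, the `assignments` map, and the `findRule` function are all hypothetical, and the real lookup order has more steps than this, so rely on the official documentation for the exact sequence.

```javascript
// Hypothetical category tree: each category only knows its parent.
const categories = {
  root: { parent: null },
  mens: { parent: "root" },
  "mens-shoes": { parent: "mens" },
  "mens-trail-shoes": { parent: "mens-shoes" },
};

// Hypothetical assignments: category ID -> rule expression assigned at that level.
const assignments = {
  root: "${Product.pageTitle ELSE Product.name}",
  "mens-shoes": "${Product.pageTitle ELSE Product.name AND Constant(' | Shoes')}",
};

// Walk from the given category towards the root and return the first assignment found.
function findRule(categoryId) {
  let current = categoryId;
  while (current !== null && current !== undefined) {
    if (Object.prototype.hasOwnProperty.call(assignments, current)) {
      return { assignedAt: current, rule: assignments[current] };
    }
    const node = categories[current];
    current = node ? node.parent : null;
  }
  return null; // nothing assigned anywhere on the path
}

console.log(findRule("mens-trail-shoes")); // inherits from "mens-shoes"
console.log(findRule("mens"));             // inherits from "root"
```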

The Golden Rule: Building Hybrid-Ready Rules

The most common and damaging pitfall is the “Accidental Override.” Imagine a merchandiser spends days crafting the perfect, keyword-rich pageTitle for a key product. A developer then deploys a seemingly helpful rule like ${Product.name} assigned to the whole catalog. Because the rule is found and applied, it will silently overwrite the merchandiser’s manual work.

This isn’t just a technical problem; it’s a failure of process and collaboration. The platform’s inheritance model and conditional syntax force a strategic decision about data governance: will SEO be managed centrally via rules, granularly via manual data entry, or a hybrid of both? The developer’s job is not just to write the rule but to implement the agreed-upon governance model.

The solution is the Hybrid Pattern, which should be the default for almost every rule you create.

Example Hybrid PDP Title Rule: ${Product.pageTitle ELSE Product.name} | ${Site.displayName}

Let’s break down how the engine processes this:

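Product.pageTitle: The platform first checks the product object for a value in the pageTitle attribute. This is the field merchandisers use for manual entry in Business Manager (or hopefully imported from a third-party system).

ELSE: If, and only if, the pageTitle attribute is empty or null, the engine proceeds to the expression after the ELSE operator. If pageTitle has a value, evaluation of that expression stops, and that value is used.

Product.name: The fallback. It is only used when no manual pageTitle exists.

| ${Site.displayName}: The separator and the site name sit outside the first expression, so they are appended in both cases.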

This pattern provides the best of both worlds: automation and scalability for the thousands of products that don’t need special attention, and precise manual control for the high-priority pages that do. Adopting this pattern as a standard practice is the key to a harmonious relationship between development and business teams.
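Before rolling a hybrid rule out to a whole catalog, it is worth knowing which products will keep their manual title and which will fall back. Here is a small sketch of that audit, assuming you have exported products as plain objects with `id`, `name`, and `pageTitle` fields; the helper names and the sample data are invented for this example.

```javascript
// True when a merchandiser has entered a non-blank manual title.
function hasManualTitle(product) {
  return typeof product.pageTitle === "string" && product.pageTitle.trim() !== "";
}

// Mirrors ${Product.pageTitle ELSE Product.name} | ${Site.displayName}
function hybridTitle(product, siteName) {
  const base = hasManualTitle(product) ? product.pageTitle : product.name;
  return `${base} | ${siteName}`;
}

// Reports, per product, where the title would come from and what it would be.
function auditTitles(products, siteName) {
  return products.map((product) => ({
    id: product.id,
    source: hasManualTitle(product) ? "manual" : "fallback",
    title: hybridTitle(product, siteName),
  }));
}

// Hypothetical sample data.
const sampleProducts = [
  { id: "P1", name: "SummitPro Runner", pageTitle: "Best Trail Running Shoe for Rocky Terrain" },
  { id: "P2", name: "RidgeLine Tent", pageTitle: "" },
];

console.log(auditTitles(sampleProducts, "GoOutdoors"));
```

A report like this is also a handy artefact to share with the SEO team before the rule goes live.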

Advanced Strategies and Best Practices

Once you’ve mastered the fundamentals of syntax and inheritance, you can begin to craft mighty rules that go far beyond simple title generation.

Crafting Killer Rules: Practical Examples

The Perfect PDP Title (Hybrid)

Combines the product’s manual title, or falls back to its name, brand, and the site name.

${Product.pageTitle ELSE Product.name AND Constant(' - ') AND Product.brand AND Constant(' | ') AND Site.displayName}

Scenario 1 (Manual pageTitle exists):

Data: Product.pageTitle = “Best Trail Running Shoe for Rocky Terrain”

Generated Output: Best Trail Running Shoe for Rocky Terrain

Scenario 2 (No manual pageTitle, falls back to dynamic pattern):

Data: Product.name = “SummitPro Runner”, Product.brand = “Peak Performance”, Site.displayName = “GoOutdoors”

Generated Output: SummitPro Runner - Peak Performance | GoOutdoors

The Engaging PLP Description (Hybrid)

Checks for a manual category description, otherwise generates a compelling, dynamic sentence.

${Category.pageDescription ELSE Constant('Shop our wide selection of ') AND Category.displayName AND Constant(' at ') AND Site.displayName AND Constant('. Free shipping on orders over $50!')}

Scenario 1 (Manual pageDescription exists):

Data: Category.pageDescription = “Explore our premium, all-weather tents. Designed for durability and easy setup, perfect for solo hikers or family camping trips.”

Generated Output: Explore our premium, all-weather tents. Designed for durability and easy setup, perfect for solo hikers or family camping trips.

Scenario 2 (No manual pageDescription, falls back to dynamic pattern):

Data: Category.displayName = “Camping Tents”, Site.displayName = “GoOutdoors”

Generated Output: Shop our wide selection of Camping Tents at GoOutdoors. Free shipping on orders over $50!

Dynamic OpenGraph Tags

Create separate rules for og:title and og:description using the same hybrid patterns. For og:image, you can access the product’s image URL.

${ProductImageURL.viewType} (Note: The specific viewtype is needed, e.g. large)

Scenario: A user shares a product page on a social platform.

Data: The system has an image assigned to the product in the ‘large’ slot.

Generated Output: https://www.gooutdoors.com/images/products/large/PROD12345_1.jpg

Dynamic Robots Tag for Faceted Search

This is a truly advanced use case that demonstrates how rules can implement sophisticated SEO strategy. This rule helps prevent crawl budget waste and duplicate content issues by telling search engines not to index faceted search pages.

${IF SearchRefinement.refinementColor OR SearchPhrase THEN Constant('noindex,nofollow') ELSE Constant('index,follow')}

Scenario 1 (User refines a category by color):

A user is on the “Backpacks” category page and clicks the “Blue” color swatch to filter the results.

Data: SearchRefinement.refinementColor has a value (“Blue”).

Generated Output: noindex,nofollow

Result: This filtered page won’t be indexed by Google, saving crawl budget.

Scenario 2 (User performs a site search):

A user types “waterproof socks” into the search bar.

Data: SearchPhrase has a value (“waterproof socks”).

Generated Output: noindex,nofollow

Result: The search results page won’t be indexed.

Scenario 3 (User lands on a standard category page):

A user navigates directly to the “Backpacks” category page without any filters.

Data: SearchRefinement.refinementColor is empty AND SearchPhrase is empty.

Generated Output: index,follow

Result: The main category page will be indexed by Google as intended.
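The three scenarios boil down to a single decision, which can be written out as a plain function. This is a sketch of the rule's logic only, not of how the engine evaluates it; `robotsContent` and its parameter names are made up for the example.

```javascript
// Mirrors: IF SearchRefinement.refinementColor OR SearchPhrase THEN noindex ELSE index
function robotsContent({ refinementColor = "", searchPhrase = "" } = {}) {
  const isFilteredOrSearch =
    refinementColor.trim() !== "" || searchPhrase.trim() !== "";
  return isFilteredOrSearch ? "noindex,nofollow" : "index,follow";
}

console.log(robotsContent({ refinementColor: "Blue" }));           // scenario 1
console.log(robotsContent({ searchPhrase: "waterproof socks" }));  // scenario 2
console.log(robotsContent({}));                                    // scenario 3
```

Note that the rule as written only looks at the colour refinement; other refinements would need their own conditions.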

The Page Designer Conundrum: The Unofficial Unofficial Workaround

Here we encounter a significant limitation: out of the box, the Meta Tag Rules engine does not work with standard Page Designer pages. The underlying Page API object lacks the necessary pageMetaTags. This creates a significant gap for sites that rely on content marketing and campaign landing pages built in Page Designer.

Luckily, an already complete working “workaround” example has been created by David Pereira here.

The Minefield: Warnings, Pitfalls, and Troubleshooting

While powerful, the Meta Tag Rules engine is a minefield of potential “gotchas” that can frustrate developers and cause real business impact if not anticipated.

Warning – The “Accidental Override”: This cannot be overstated. A simple, non-hybrid rule (${Product.name}) deployed to production can instantly nullify months of careful, manual SEO work by the merchandising team. The Hybrid Pattern (${Product.pageTitle ELSE...}) is your shield. Always use it. This is fundamentally a process failure, not just a technical one, highlighting the need for a clear “contract” between development and business teams about who owns which data.

Pitfall – The “30-Minute Wait of Despair”: When you save or assign a rule in Business Manager, it can take up to 30 minutes for the change to appear on the storefront. This is due to platform-level caching. This delay is a classic initiation rite for new SFCC developers who are convinced their rule is broken. The solution is patience: save your rule, then go get a coffee before you start frantically debugging. (Note: I personally have never had to wait this long)

Pitfall – The Empty Attribute Trap: If your rule references an attribute (Product.custom.seoKeywords) that is empty for a particular product, the engine treats it as a null/false value. This can cause your conditional logic to fall through to an ELSE condition you didn’t expect. This underscores that the effectiveness of your rules is directly dependent on the quality and completeness of your catalog and content data.

Troubleshooting the "Black Box"

You cannot attach the Script Debugger to the rule engine or step through its execution. Troubleshooting is a process of indirect observation.

Step 1: Preview in Business Manager: Your first and best line of defense. The SEO module has a preview function that lets you test a rule against a specific product, category, or content asset ID. This gives you instant feedback on the generated output without affecting the live site.

Step 2: Inspect the Source: The ultimate source of truth is the final rendered HTML. Load the page on your storefront, right-click, and select “View Page Source.” Search for <title> or <meta name="description"> to see exactly what the engine produced.

Step 3: The Code-Level Safety Net: As a developer integrating the rules into templates, you have one final check. The dw.web.PageMetaData object, which is populated by the rules, is available in the pdict. You can use the method isPageMetaTagSet('description') within an <isif> statement in your ISML template. This allows you to render a hardcoded, generic fallback meta tag directly in the template if, for some reason, the rule engine failed to generate one.
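The same safety net can live in script code instead of the template. Below is a minimal sketch that relies on `isPageMetaTagSet()` and `getPageMetaTags()` from dw.web.PageMetaData, with each tag exposing `ID` and `content`. The `resolveDescription` helper and the stub object are hypothetical; the stub exists only so the example runs outside the platform.

```javascript
// Returns the rule-generated description, or a generic fallback when none was set.
function resolveDescription(pageMetaData, fallback) {
  if (pageMetaData && pageMetaData.isPageMetaTagSet("description")) {
    const tags = pageMetaData.getPageMetaTags();
    for (let i = 0; i < tags.length; i += 1) {
      if (tags[i].ID === "description" && tags[i].content) {
        return tags[i].content;
      }
    }
  }
  return fallback;
}

// Stand-in for dw.web.PageMetaData, for running this sketch outside SFCC only.
function createStubPageMetaData(tags) {
  return {
    isPageMetaTagSet(id) {
      return tags.some((tag) => tag.ID === id);
    },
    getPageMetaTags() {
      return tags;
    },
  };
}

const withRule = createStubPageMetaData([
  { ID: "description", content: "Shop our wide selection of Camping Tents at GoOutdoors." },
]);
const withoutRule = createStubPageMetaData([]);

console.log(resolveDescription(withRule, "Outdoor gear for every adventure."));
console.log(resolveDescription(withoutRule, "Outdoor gear for every adventure."));
```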

The Performance Question: Debunking the Myth

A common concern is that complex nested IF/ELSE rules might slow down page load times, but this is mostly a myth. The real performance issue relates to caching. For cached pages, the impact on performance is nearly nonexistent because the server evaluates the rule only once when generating the page’s HTML during the initial request. This HTML is then stored in the cache. Future visitors receive this pre-rendered static HTML directly from the cache, skipping re-evaluation. The small performance cost only occurs on cache misses. Thus, the focus shouldn’t be on creating overly simple rules but on maintaining a high cache hit rate.
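To see why the cache hit rate matters more than rule complexity, here is a toy model of a page cache. It is a sketch under obvious assumptions: `createPageCache` is invented for this example, and the real platform cache is far more involved. The point is only that the expensive render step runs once per cached URL.

```javascript
// Toy page cache: the render function (which would include rule evaluation)
// runs only on a cache miss.
function createPageCache(render) {
  const cache = new Map();
  let renderCount = 0;

  return {
    get(url) {
      if (!cache.has(url)) {
        renderCount += 1; // cache miss: the rule is evaluated here
        cache.set(url, render(url));
      }
      return cache.get(url); // cache hit: pre-rendered HTML, no evaluation
    },
    stats() {
      return { renderCount, cachedPages: cache.size };
    },
  };
}

const pageCache = createPageCache((url) => `<title>Page for ${url}</title>`);

pageCache.get("/backpacks");
pageCache.get("/backpacks");
pageCache.get("/backpacks");
pageCache.get("/tents");

console.log(pageCache.stats());
```

Four requests, two renders: the second and third visitors to `/backpacks` never touch the rule.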

We can be confident that the Salesforce team has developed an effective feature to guarantee optimal performance. Keep in mind the platform cache with a 30-minute delay we previously mentioned. Within that “black box,” a separate system is likely also in place to protect performance.

The Final Verdict: Meta Tag Rules vs. The Alternatives

When deciding how to manage SEO metadata in SFCC, developers face three philosophical choices:

Manual Entry Only (The Control Freak)

Manually populating the pageTitle, pageDescription, etc., for every item in Business Manager.

Pros: Absolute, granular control. Perfect for a small catalog or a handful of critical landing pages.

Cons: Completely unscalable. Highly prone to human error and data gaps. A maintenance and governance nightmare for any site of significant size.

Custom ISML/Controller Logic (The Re-inventor)

Ignoring the rule engine and writing your own logic in controllers and ISML templates to build meta tags.

Pros: Theoretically unlimited flexibility. You can call external services, perform complex calculations, etc.

Cons: You are re-inventing a core platform feature, which introduces significant technical debt. The logic is completely hidden from business users, making it a black box that only developers can manage. It’s harder to maintain and creates upgrade path risks.

Meta Tag Rules (The Pragmatist)

Using the native feature as intended.

Pros: The standard, platform-supported, scalable, and maintainable solution. The logic is transparent and manageable by trained users in Business Manager. It fully supports the hybrid approach, offering the perfect balance of automation and control.

Cons: You are constrained by the built-in DSL. It has known limitations, like the Page Designer issue and syntax, that may require custom workarounds.

What about the PWA Kit?

Yes, you can absolutely continue to leverage the power of Page Meta Tag Rules from the Business Manager in a headless setup. The key is understanding that your headless front end (like a PWA) communicates with the SFCC backend via APIs.

While historically this might have required a development task to extend a standard API or create a new endpoint to expose the dynamically generated meta tag values, this is becoming increasingly unnecessary. Salesforce is actively expanding the Salesforce Commerce API (SCAPI), continuously adding new endpoints and enriching existing ones to expose more data directly.

This ongoing expansion, as seen with enhancements to APIs like Shopper Search and Shopper Products, means that the SEO-rich data generated by your rules is more likely to be available out of the box. Instead of building custom solutions, the task for developers is shifting towards simply querying the correct, updated SCAPI endpoint.

This evolution makes it easier than ever to fetch the meta tags for these pages. It validates the headless approach, allowing you to maintain a robust, centralised SEO strategy in the Business Manager while fully embracing the flexibility and performance of a modern front-end architecture.
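On the front end, the work then becomes mapping the API response onto your document head. The sketch below assumes the product or search response carries a `pageMetaTags` array of `{ id, value }` objects; check the response schema of the endpoint and version you are calling before relying on that shape. The `toHeadTags` function and the sample payload are made up for illustration.

```javascript
// Converts an assumed pageMetaTags array into a structure a head manager can render.
function toHeadTags(pageMetaTags = []) {
  const head = { title: null, meta: [] };

  for (const tag of pageMetaTags) {
    if (tag.id === "title") {
      head.title = tag.value;
    } else if (tag.id.startsWith("og:")) {
      // OpenGraph tags use the "property" attribute
      head.meta.push({ property: tag.id, content: tag.value });
    } else {
      head.meta.push({ name: tag.id, content: tag.value });
    }
  }

  return head;
}

// Hypothetical payload fragment, shaped like the assumption described above.
const sampleResponse = {
  id: "PROD12345",
  pageMetaTags: [
    { id: "title", value: "SummitPro Runner - Peak Performance | GoOutdoors" },
    { id: "description", value: "Shop the SummitPro Runner at GoOutdoors." },
    { id: "og:title", value: "SummitPro Runner" },
  ],
};

console.log(toHeadTags(sampleResponse.pageMetaTags));
```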

Conclusion: Go Forth and Automate

Salesforce B2C Commerce Cloud’s Page Meta Tag Rules are far more than a simple configuration screen. They are a strategic tool for building scalable, efficient, and collaborative e-commerce platforms. By mastering the hybrid pattern, understanding the inheritance cascade, knowing how to tackle limitations like the Page Designer gap, and—most importantly—communicating with your business teams, you can transform SEO from a manual chore into an automated powerhouse.

So, the next time that dreaded SEO spreadsheet lands in your inbox, don’t sigh and start writing an importer. Crack open the Page Meta Tag Rules, build some smart, hybrid rules, and go grab a coffee. You’ve just saved your future self hundreds of hours of pain.

You’re welcome.


The post Session Sync Showdown: From plugin_slas to Native Hybrid Auth in SFRA and SiteGenesis appeared first on The Rhino Inquisitor.

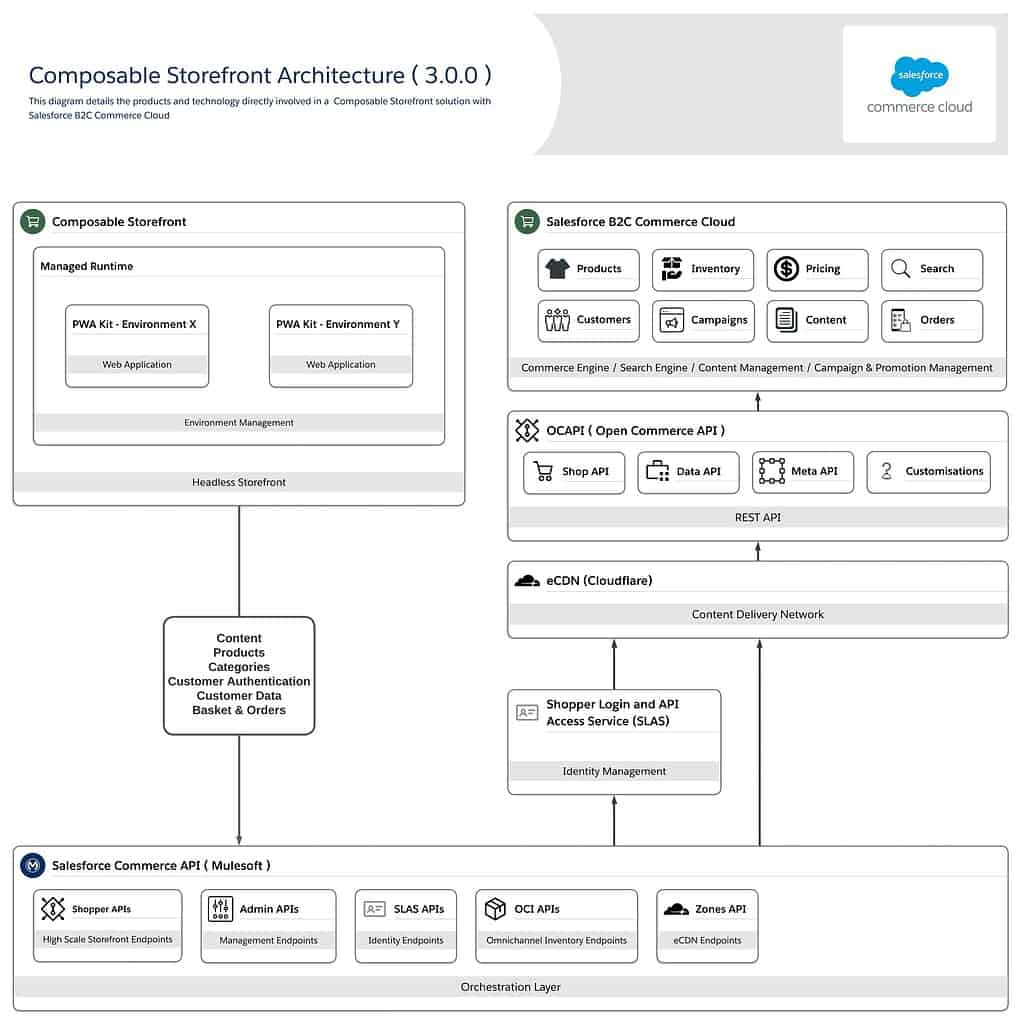

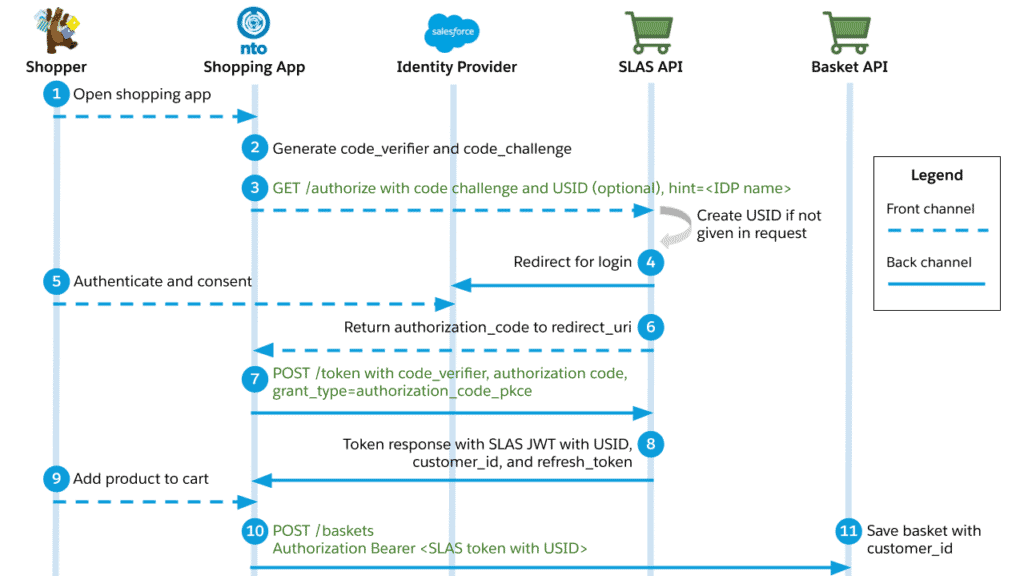

Headless APIs have been available in Salesforce B2C Commerce Cloud for some time under the name OCAPI (Open Commerce API). In 2020, a new set of APIs, known as the SCAPI (Salesforce Commerce API), was introduced.

Within that new set of APIs, a subset was focused on giving developers complete control of the login process of customers, called SLAS (Shopper Login And API Access Service). In February 2022, Salesforce also released a cartridge for SFRA, enabling easy incorporation of SLAS within your current setup.

But let’s cut to the chase. The plugin_slas cartridge (which we will discuss later in the article) was a necessary bridge for its time, but it also introduced performance bottlenecks, API quota concerns, and maintenance headaches.

With the release of native Hybrid Authentication, Salesforce has fundamentally changed the game for hybrid SFRA/Composable storefronts. This guide is your in-depth exploration of the “why” and “how”—we’ll dissect the architectural shift and equip you with the strategic insights you need.

What is SLAS?

But what is SLAS, anywho? It is a set of APIs that allows secure access to Commerce Cloud shopper APIs for headless applications.

Some use-cases:

- Single Sign-On: Allow your customers to use a single set of log-ins across multiple environments (Commerce Cloud vs. a Community Portal)

- Third-Party Identity Providers: Use third-party services that support OpenID like Facebook or Google.

Why use SLAS?

Looking at the above, you might think: “But can’t I already do these things with SFRA and SiteGenesis?”

In a way, you’re right. These login types are already supported in the current system. However, they can’t be used across other applications, such as Endless Aisle, kiosks, or mobile apps, without additional development. You will need to create custom solutions for each case.

SLAS is a headless API that can be used by all your channels, whether they are Commerce Cloud or not.

Longer log-in time

People familiar with Salesforce B2C Commerce Cloud know that the storefront logs you out after 30 minutes of inactivity. Many projects have requested a longer session, especially during checkout, as this can be particularly frustrating.

Previously, extending this timeout wasn’t possible. Now, with SLAS, you can increase it up to 90 days! Yes, you read correctly—a significant three-month extension compared to previous options!

The Old Guard: A Necessary Evil Called plugin_slas

To understand where we’re going, we have to respect where we’ve been. When Salesforce B2C Commerce Cloud began its push into the headless and composable world with the PWA Kit, a significant architectural gap emerged.

The traditional monoliths, Storefront Reference Architecture (SFRA) and SiteGenesis, managed user sessions using a dwsid cookie. The new headless paradigm, however, operates on a completely different authentication mechanism: the Shopper Login and API Access Service (SLAS), which utilises JSON Web Tokens (JWTs).

For any business looking to adopt a hybrid model—keeping parts of their site on SFRA while building new experiences with the PWA Kit—this created a jarring disconnect. How could a shopper’s session possibly persist across these two disparate worlds?

The Problem It Solved: A Bridge Over Troubled Waters

Salesforce’s answer, released in February 2022, was the plugin_slas cartridge. It was designed as a plug-and-play solution for SFRA that intercepted the standard login process. Instead of relying on the traditional dw.system.Session script API calls for authentication, the cartridge rerouted these flows through SLAS. This clever maneuver effectively “bridged” the two authentication systems, allowing a shopper to navigate from a PWA Kit page to an SFRA checkout page without losing their session or their basket.

For its time, the cartridge was a critical enabler. It unlocked the possibility of hybrid deployments and introduced powerful SLAS features to the monolithic SFRA world, such as integration with third-party Identity Providers (IDPs) like Google and Facebook, as well as the much-requested ability to extend shopper login times from a paltry 30 minutes to a substantial 90 days.

The Scars It Left: The True Cost of the Cartridge

While the plugin_slas cartridge solved an immediate and pressing problem, it came at a significant technical cost. Developers on the front lines quickly discovered the operational friction and performance penalties baked into its design.

The Performance Tax: The cartridge introduced three to four remote API calls during login and registration. These weren’t mere internal functions; they involved network-heavy SCAPI and OCAPI calls used for session bridging. This design resulted in noticeable latency during the crucial authentication phase. Every login, registration, and session refresh experienced this delay, impacting user experience.

The API Quota Black Hole: This was perhaps the most challenging issue for development teams, especially when the quota limit was still 8 – this is now 16, luckily. B2C Commerce enforces strict API quotas that cap the number of API calls per storefront request. The plugin_slas cartridge could consume four, and in some registration cases, even five API calls just to log in a user.

Spending half or more of an eight-call limit on authentication alone was a risky strategy. This heavily restricted other vital operations, such as retrieving product information, checking inventory, or applying promotions, all within the same request. It led to constant stress and compelled developers to create complex, fragile workarounds.
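The arithmetic is simple but worth spelling out. This sketch uses the numbers quoted above (a limit of 8, later 16, and four to five calls per login); `quotaAfterLogin` is a name invented for the example.

```javascript
// How much of the per-request API quota is left once login has taken its share.
function quotaAfterLogin(quotaLimit, loginCalls) {
  return {
    remaining: quotaLimit - loginCalls,
    shareUsed: loginCalls / quotaLimit,
  };
}

console.log(quotaAfterLogin(8, 4));  // old limit, standard login
console.log(quotaAfterLogin(8, 5));  // old limit, registration worst case
console.log(quotaAfterLogin(16, 5)); // current limit, registration worst case
```

Even with the raised limit, a worst-case registration still spends close to a third of the budget before any business logic has run.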

The Maintenance Quagmire: As a cartridge, plugin_slas was yet another piece of critical code that teams had to install, configure, update, and regression test. When Salesforce released bug fixes or security patches for the cartridge, it required a full deployment cycle to get them into production. This added operational overhead and introduced another potential point of failure in the authentication path, a path that demands maximum stability and security. The cartridge was a tactical patch on a strategic problem, and its very architecture, an external add-on making remote calls back to the platform, was the root cause of its limitations.

The New Sheriff in Town: Platform-Native Hybrid Authentication

Recognising the limitations of the cartridge-based approach, Salesforce went back to the drawing board and engineered a proper, strategic solution. Released with B2C Commerce version 25.3, Hybrid Authentication is not merely an update; it is a fundamental architectural evolution.

What is Hybrid Auth, Really? It's Not Just a Cartridge-ectomy

Hybrid Authentication is best understood as a platform-level session synchronisation engine. It completely replaces the plugin_slas cartridge by moving the entire logic of keeping the SFRA/SiteGenesis dwsid and the headless SLAS JWT in sync directly into the core B2C Commerce platform.

This isn’t a patch or a workaround; it’s a native feature. The complex dance of bridging sessions is no longer the responsibility of a fragile, API-hungry cartridge but is now handled automatically and efficiently by the platform itself.

The Promised Land: Core Benefits of Going Native

For developers and architects, migrating to Hybrid Auth translates into tangible, immediate benefits that directly address the pain points of the past.

Platform-Native Data Synchronisation: The session bridging process is now an intrinsic part of the platform’s authentication flow. This means no more writing, debugging, or maintaining custom session bridging code. It simply works out of the box, managed and maintained by Salesforce.

A Seamless Shopper Experience: By eliminating the clunky, multi-call process of the old cartridge, the platform ensures that session state is synchronised far more reliably and with significantly less latency. The nightmare scenario of a shopper losing their session or basket when moving between a PWA Kit page and an SFRA page is effectively neutralised. This seamlessness extends beyond just the session, automatically synchronising Shopper Context data and “Do Not Track” (DNT) preferences between the two environments.

Full Support for All Templates: Hybrid Authentication is a first-class citizen for both SFRA and, crucially, the older SiteGenesis architecture. This provides a fully supported, productized, and stable path toward a composable future for all B2C Commerce customers, regardless of their current storefront template.

Is The Promised Land Free of Danger?

As with any new feature or solution, early adoption often means less community support initially, and you may encounter unique issues as one of the first partners or customers.

Therefore, it’s essential to review all available documentation and thoroughly test various scenarios in testing environments, such as a sandbox or development environment, before deploying to production.

Hardening Your Security Posture for 2025 and Beyond

The security landscape for web authentication is constantly evolving. The migration to Hybrid Auth presents a perfect opportunity to not only simplify your architecture but also to modernise your security posture and ensure compliance with the latest standards.

The 90-Day Session: A Convenience or a Liability?

While this extended duration is highly convenient for users on trusted personal devices, such as mobile apps, it remains a significant security liability on shared or public computers. If a user authenticates on a library computer, their account and personal data could be exposed for up to three months.

The power to configure this timeout lies within your SLAS client’s token policy. It is strongly recommended that development, security, and legal teams collaborate to define a session duration that strikes an appropriate balance between user convenience and risk. For most web-based storefronts, a much shorter duration, such as 1 to 7 days, is a more prudent and secure choice.
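When those teams sit down together, it helps to put concrete numbers on the table. The sketch below is plain date arithmetic and does not touch any SLAS configuration; `daysToSeconds` and `sessionExpiry` are hypothetical helpers for comparing candidate durations.

```javascript
const SECONDS_PER_DAY = 24 * 60 * 60;

// Converts a session duration in days to seconds.
function daysToSeconds(days) {
  return days * SECONDS_PER_DAY;
}

// Returns the moment a session started at loginDate would expire.
function sessionExpiry(loginDate, days) {
  return new Date(loginDate.getTime() + daysToSeconds(days) * 1000);
}

const login = new Date("2025-01-01T00:00:00Z");

for (const days of [1, 7, 90]) {
  console.log(
    `${days} day(s) = ${daysToSeconds(days)} seconds, expires ${sessionExpiry(login, days).toISOString()}`
  );
}
```

Seeing that a login on New Year's Day stays valid until April makes the shared-computer risk far less abstract.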

Modern SLAS Security Mandates You Can't Ignore

Since the plugin_slas cartridge was first introduced, Salesforce has rolled out several security enhancements that are now effectively mandatory. Failing to address them during your migration will result in a broken or insecure implementation.

- Enforcing Refresh Token Rotation: This is a major change, aligning with the OAuth 2.1 security specification. For public clients, which include most PWA Kit storefronts, SLAS now prohibits the reuse of a refresh token. When an application uses a refresh token to get a new access token, the response will contain a new refresh token. The application must store and use this new refresh token for subsequent refreshes. Attempting to reuse an old refresh token will result in a 400 'Invalid Refresh Token' error. The plugin_slas cartridge had to be updated to version 7.4.1 to support this, and any custom headless frontend must be updated to handle this rotation logic.

- Stricter Realm Validation: To enhance security and prevent misconfiguration, SCAPI requests now undergo stricter validation to ensure the realm ID in the request matches the assigned short code for that realm. A mismatch will result in a 404 Not Found error.

- Choosing the Right Client: Public vs. Private: The fundamental rule of OAuth 2.0 remains paramount. If your application cannot guarantee the confidentiality of a client secret (e.g., a client-side single-page application or a native mobile app), you must use a public client. If the secret can be securely stored on a server (e.g., in a traditional web app or a Backend-for-Frontend architecture), you should use a private client.
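Refresh token rotation is the mandate most likely to bite a custom frontend, so here is a minimal sketch of the client-side bookkeeping. Everything in it is an assumption made for illustration: `createFakeTokenServer` is an in-memory stand-in for the token endpoint so the example runs without a network, and `createTokenManager` is a hypothetical wrapper, not part of any SDK. The one rule it demonstrates is real: always replace the stored refresh token with the one from the latest response.

```javascript
// In-memory stand-in for the token endpoint. It rejects any refresh token
// other than the most recently issued one, imitating rotation enforcement.
function createFakeTokenServer(initialRefreshToken) {
  let validRefreshToken = initialRefreshToken;
  let counter = 0;

  return {
    async refresh(refreshToken) {
      if (refreshToken !== validRefreshToken) {
        const error = new Error("Invalid Refresh Token");
        error.status = 400;
        throw error;
      }
      counter += 1;
      validRefreshToken = `refresh-${counter}`;
      return { access_token: `access-${counter}`, refresh_token: validRefreshToken };
    },
  };
}

// Hypothetical client-side manager: stores the rotated token after every refresh.
function createTokenManager(server, initialRefreshToken) {
  let refreshToken = initialRefreshToken;

  return {
    async getFreshAccessToken() {
      const response = await server.refresh(refreshToken);
      refreshToken = response.refresh_token; // rotation: never keep the old one
      return response.access_token;
    },
    currentRefreshToken() {
      return refreshToken;
    },
  };
}
```

In a real storefront the "store" step also has to survive page reloads and concurrent tabs, which is where most rotation bugs hide.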

Because the migration to Hybrid Auth requires touching authentication code on both the SFCC backend and the headless frontend, it is the ideal and necessary time to conduct a full security audit. The migration project’s scope must include updating your implementation to meet these new, stricter standards.

Conclusion: Be the Rhino, Not the Dodo

Migrating from the plugin_slas cartridge to native Hybrid Authentication is not just a simple version bump or a minor refactor; it is a strategic architectural upgrade. It’s an opportunity to pay down significant technical debt, reclaim lost performance, eliminate API quota anxiety, and dramatically simplify your hybrid architecture.

This shift is a clear signal of Salesforce’s commitment to making the composable and hybrid developer experience more robust, stable, and platform-native. By embracing foundational platform features, such as Hybrid Authentication, over temporary, bolt-on cartridges, you are actively future-proofing your implementation and aligning with the platform’s strategic direction. Don’t let your hybrid architecture become a relic held together by legacy code.

Be the rhino: charge head-first through the complexity and build on the stronger foundation the platform now provides.

The post Session Sync Showdown: From plugin_slas to Native Hybrid Auth in SFRA and SiteGenesis appeared first on The Rhino Inquisitor.

The post B2C Commerce Cloud Introduction: Exploring Features & Tech Stacks appeared first on The Rhino Inquisitor.

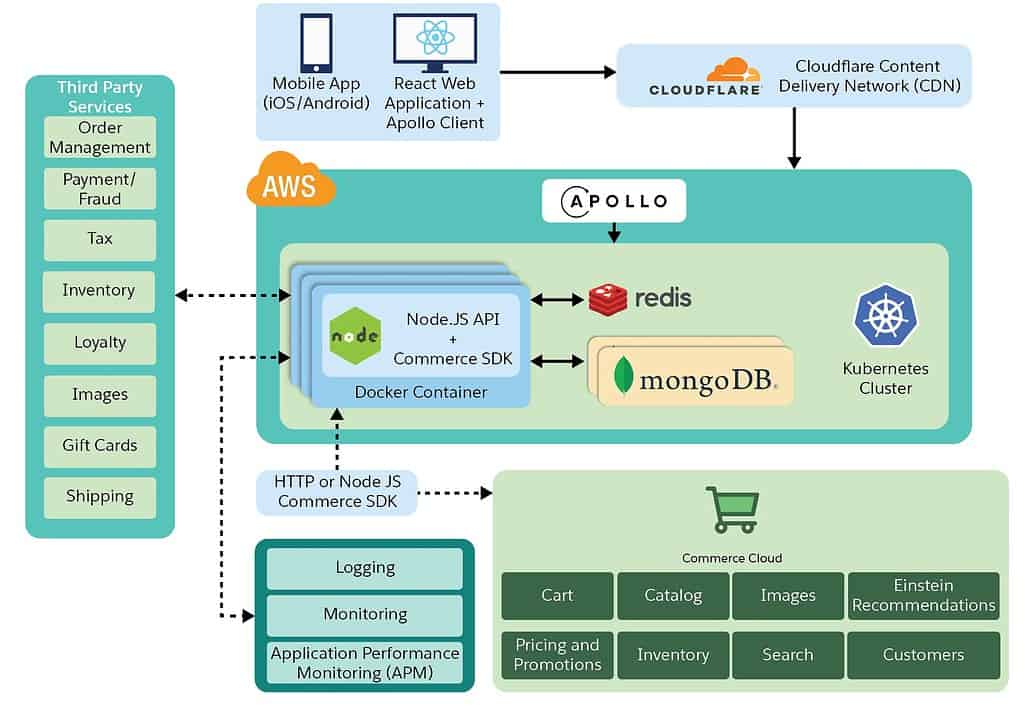

]]>Join me as we delve into the world of B2C Commerce cloud, its history, tech stacks, and the shift towards headless architecture. In this insightful session, learn about the evolution, development languages, APIs, and setting up a composable storefront.

Introduction to B2C Commerce Cloud (Demandware)

Welcome to the world of B2C Commerce Cloud, part of the expansive Salesforce ecosystem. If you’re looking to dive into the intricacies of B2C Commerce Cloud, you’re in the right place. Let’s explore what makes this platform unique, how it fits into the Salesforce family, and understand its various components and historical background.

Evolution from Demandware to B2C Commerce Cloud

The Demandware Genesis

In 2004, Demandware was founded to offer e-commerce solutions with a unique flavour. After twelve years of service, the company was acquired by Salesforce in 2016 and rebranded as B2C Commerce Cloud. This acquisition coincided with a series of strategic integrations by Salesforce and solidified Demandware’s role as a core component of Salesforce Commerce offerings.

The Growth Phase

Over the years, several acquisitions and integrations have significantly enhanced B2C Commerce Cloud’s capabilities.

B2C Commerce Cloud versus Commerce on Core

One of the first points of clarification when discussing Commerce Cloud is the differentiation between its various offerings:

- B2C Commerce Cloud: Tailored for business-to-consumer interactions.

- B2B Commerce Cloud: Designed for business-to-business operations, also known as Commerce on Core, emphasising its deep integration with the core Salesforce platform.

- D2C Commerce Cloud: Designed for direct-to-consumer sales, aimed at B2B companies that want to sell directly to consumers.

Managed Packages vs. Cartridges

A unique trait of B2C Commerce Cloud is the absence of managed and unmanaged packages. Instead, developers use cartridges, which are similar to zip files containing all the necessary source code. This differs from the package-based model seen in core Salesforce development.

Role-Based Access and Permissions

While Salesforce’s core platform offers extensive role-based access and permissions options, B2C Commerce Cloud simplifies this by offering module-level access. Users can have read or write access to entire modules, such as products, rather than specific records or fields.

The Development Landscape

B2C Commerce Cloud utilises JavaScript for both backend and frontend development, marking a notable shift from the Apex and Lightning Web Components traditionally used in core Salesforce.

B2C Commerce Cloud Development Evolution

The Early Days: Pipelines

At first, Demandware utilised a pipeline architecture similar to Salesforce flows, adopting a low-code method to establish backend logic. This setup enabled developers to outline page rendering processes using a visual interface.

Transition to Controllers

In subsequent years, pipelines were supplemented and later largely replaced by controllers written in JavaScript. This phase saw Demandware transitioning into more conventional coding methods while retaining some of its original workflow design elements.

Modern Stack: Storefront Reference Architecture and Beyond

The most significant evolution in the platform’s architecture was the introduction of the Mobile-First Reference Architecture (MFRA) and the Storefront Reference Architecture (SFRA). These updates modernised the front end and established a more modular and flexible e-commerce platform.

Headless Commerce: Composable Storefront

In 2021, B2C Commerce Cloud underwent another substantial transformation, embracing the headless commerce model with the acquisition and integration of Mobify. This led to the creation of the PWA Kit and Managed Runtime, now known collectively as the Composable Storefront. This shift aimed to separate frontend and backend development, providing:

- Flexibility: Different teams can work on the frontend and backend simultaneously without conflicting changes.

- Scalability: Easier to scale different parts of the application independently.

- Adaptability: Enables use of the latest frontend technologies like React, ensuring developers leverage modern, well-supported tools and frameworks.

Delving into the Technical Architecture

Understanding the Composable Storefront

The composable storefront represents the latest and most flexible approach to building e-commerce apps on B2C Commerce Cloud. Here’s how it plays out:

- Managed Runtime: Hosts the frontend application, handling deployment and environment management.

- PWA Kit: A React-based frontend framework that communicates with the backend using robust APIs.

API Connectivity: Open Commerce API and Salesforce Commerce API

B2C Commerce Cloud offers two primary API sets:

- Open Commerce API (OCAPI): The original set of APIs dating back to Demandware’s early days.

- Salesforce Commerce API (SCAPI): A newer set designed to provide a more seamless and integrated experience with Salesforce environments, capable of connecting to the environment’s underlying services, like the product database.

Both APIs enable different degrees of interaction between the frontend and backend systems, with SCAPI being pushed as the preferred future standard.
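To give a feel for how the two API sets differ from a caller's point of view, the sketch below builds a product-detail URL for each. Treat it as an illustration under assumptions: the hostnames, IDs, and the OCAPI version string `v23_2` are placeholders, and the exact paths should be checked against the official API references before use.

```python
from urllib.parse import urlencode


def ocapi_product_url(host: str, site_id: str, version: str, product_id: str, client_id: str) -> str:
    """OCAPI Shop API: served from the instance hostname, scoped by site in the path."""
    query = urlencode({"client_id": client_id})
    return f"https://{host}/s/{site_id}/dw/shop/{version}/products/{product_id}?{query}"


def scapi_product_url(short_code: str, organization_id: str, site_id: str, product_id: str) -> str:
    """SCAPI Shopper Products: served from a shared API domain, scoped by organization."""
    query = urlencode({"siteId": site_id})
    return (
        f"https://{short_code}.api.commercecloud.salesforce.com"
        f"/product/shopper-products/v1/organizations/{organization_id}"
        f"/products/{product_id}?{query}"
    )


# Placeholder values, for illustration only.
print(ocapi_product_url("example.com", "RefArch", "v23_2", "123", "my-client-id"))
print(scapi_product_url("abcd1234", "f_ecom_zzzz_001", "RefArch", "123"))
```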

Creating Your Own Composable Storefront

Local Development Setup

To set up a local development environment for the composable storefront:

- Clone the Retail App Example from GitHub (via npx).

- Install necessary dependencies and configure local settings.

- Run the application locally to start your development.

npx @salesforce/pwa-kit-create-app

npm start

The above commands will set up your project, start the development server, and allow you to make changes to the code, with instant live reloading to see your updates.

Accessing Sandboxes

Getting a sandbox is not easy if you are not a partner or customer.

Conclusion

B2C Commerce Cloud offers a robust, scalable, and customisable solution for modern e-commerce needs. Understanding its history, components, and the evolving development landscape can provide powerful insights for architects, developers, and business owners. Whether you’re just starting or are looking to deepen your expertise, the paths to learning and development within the B2C Commerce Cloud ecosystem are numerous and rewarding. Dive in, explore, and make the most of the invaluable resources available to you.

The post What is new in Salesforce Commerce Cloud 24.6? appeared first on The Rhino Inquisitor.

“Connections” is in our rear-view mirror, but some new updates to the platform are ahead! This time, we look at the June 2024 (24.6) release!

Are you interested in last month’s release notes? Click here!

Commerce Concierge has arrived

Offer your shoppers bots that provide multichannel conversational product recommendations and add products to the cart with the new Commerce Concierge for B2C Einstein Bot template. Create an enhanced bot from the template and connect your store to a new Einstein bot. You can also use the new Commerce Concierge bot blocks to add functionality.

AI has been the talk of the year at Salesforce and beyond, and finally, the first fruits of these talks have become available to us. Unfortunately, with all these new features also comes a license that needs to be purchased – Commerce Concierge or Shopper Copilot is no different.

Contact your Salesforce account executive to purchase the Einstein Bots and Digital Engagement add-on, and to get the set-up started, head over to this documentation page.

Platform

Venezuela VED and VES Currency Codes Are Supported

Merchants doing business in Venezuela can now use the VED, VES, and VEF currency codes. Previously, only VEF was supported.

Salesforce Commerce Cloud already had quite an extensive list of supported currencies and localisations.

It is good to see that Salesforce is still investing in expanding into more regions.

Get Improved Search Index Performance

B2C Commerce has updated the search index rebuild process. The update decreases resource usage and improves performance, and an unchanged index is no longer published when no changes are detected. Previously, a redundant product update index task was executed following the index rebuild, and a new search index was published when no documents were altered.

When managing multiple sites within a single environment or dealing with a large product catalog, the search index job can take a considerable amount of time to execute.

Any performance improvement to the search index is highly appreciated!

Get Better SEO Search Results

In Business Manager, the Catalog URL rules now use the localizable display value for product attributes with the type Enum of String. The localizable display value improves readability and the SEO value of storefront URLs that use multiple languages. Previously, Product URL attributes used the non-localizable Value.

It’s a bit of a confusing title, but the general idea is this: if you previously tried to use localised “Enum of String” values in your URLs and it didn’t work the way you expected, it now does.

With this update, you can optimise your URL structure for SEO using the localised display values (if it applies to your project).

Business Manager

Create Active Data Sorting Rules

The Search Index Query Testing (SIQT) tool now supports sorting rules with active data sorting attributes. Get consistent sorting results in a storefront and when testing an active data sorting rule. Previously, if a sorting rule with active data was used in SIQT, the sorting used text relevance and didn’t consider active data.

How: To access the SIQT tool, in Business Manager, select Merchant Tools | Search | Search Index Query Testing.

With this new update, we are finally able to correctly test our sorting rules, which makes our lives just a little bit easier when debugging what is going on in the storefront.

OCAPI & SCAPI

New Preferences API

The new Preferences API allows you to retrieve Site and Global preferences.

There’s been a continuous addition of new APIs to the SCAPI in recent months. This is great news for everyone working on Headless and PWA Kit projects.

Read all about the new Preferences API here.

Shopper Custom Objects API limit added

Shopper Custom Object API scopes are now limited to 20 entries. This is now enforced in both SLAS API and SLAS Admin UI.

A “quota limit” has been added to the Custom Objects API. Please keep this update in mind if you are close to or past this new platform governance rule.

Error message clarification

SLAS IDP integration has enhanced error handling to return more meaningful error messages to the caller. No change in error code information.

The refined error message: “The Account is disabled” is returned for any user account disabled in B2C Commerce. No change in the error code.

A small update to make debugging life just a little better.

Multiple SLAS sessions for a single USID on a single device

SLAS now allows shoppers to have multiple clients and authenticate on the same device with a single USID, provided the clients use their respective refresh_tokens to refresh their sessions.

This might not be the most common use case, but it does open the door for more complex session-related use cases.

Better SCAPI bundle support

With B2C Commerce version 24.5, the Shopper Baskets API supports patching variations within product bundles in a single call. This enhancement provides:

- More efficient and streamlined product bundle management, making it easier to update multiple variations within a bundle without the need for multiple API calls.

- Increased productivity for developers managing complex product bundles.

Bundle support in the PWA Kit (and headless in general) was not known to provide the best experience. Over the past two years, updates have been made in multiple locations to improve this experience.

Let’s keep ’em coming!

Order Search Engine Provides Better Performance

The search engine that provides results for the order_search API (OCAPI) has been updated across multiple instances. This update is aimed at enhancing the performance of the search engine. No user impact or behavioral change is expected.

In the last few months, performance updates have been arriving one after the other, and this time it is the turn of the “Order Search” API.

We’ll take any increase in speed for the APIs, especially one that contains essential data and is often used by third-party integrations.

Development

Service Framework Is Upgraded

B2C Commerce is upgrading the supported SFTP algorithms in the service framework.

The algorithms now include:

- Host Key—ssh-ed25519, ecdsa-sha2-nistp256, ecdsa-sha2-nistp384, ecdsa-sha2-nistp521, rsa-sha2-512, rsa-sha2-256, ssh-rsa, ssh-dss

- Key Exchange (KEX)—curve25519-sha256, [email protected], ecdh-sha2-nistp256, ecdh-sha2-nistp384, ecdh-sha2-nistp521, diffie-hellman-group-exchange-sha256, diffie-hellman-group16-sha512, diffie-hellman-group18-sha512, diffie-hellman-group14-sha256, diffie-hellman-group14-sha1, diffie-hellman-group-exchange-sha1, diffie-hellman-group1-sha1

- Cipher—aes128-ctr, aes192-ctr, aes256-ctr, [email protected], [email protected], aes128-cbc, 3des-ctr, 3des-cbc, blowfish-cbc, aes192-cbc, aes256-cbc

- Message Authentication Code (MAC)—[email protected], [email protected], [email protected], hmac-sha2-256, hmac-sha2-512, hmac-sha1, hmac-md5, hmac-sha1-96, hmac-md5-96

- Public Key Authentication—rsa-sha2-512, rsa-sha2-256, ssh-rsa

It has been a while since any changes were made to the Service Framework. With this update, we now have better support for SFTP algorithms and security options, which is always great to see!

Updated Cartridges & Tools

b2c-tools (v0.25.1)

b2c-tools is a CLI tool and library for data migrations, import/export, scripting and other tasks with SFCC B2C instances and administrative APIs (SCAPI, ODS, etc). It is intended to be complementary to other tools such as sfcc-ci for development and CI/CD scenarios.

plugin_passwordlesslogin (v2.0.0)

Passwordless login is a way to verify a user's identity without using a password. It offers protection against the most prevalent cyberattacks, such as phishing and brute-force password cracking. Passwordless login systems use authentication methods that are more secure than regular passwords, including magic links, one-time codes, registered devices or tokens, and biometrics.

- brought cartridge up to date so that it works alongside the latest plugin_slas, v7.3.0

- updated login forms and email to include the new 8 digit pin code instead of a direct link for login

- added SCAPI custom API endpoint that handles the auth and email send for composable

The post In the ring: OCAPI versus SCAPI appeared first on The Rhino Inquisitor.

As we move into 2024, the SCAPI has received much attention and has been updated with new APIs, updates, and performance improvements. On the other hand, the OCAPI rarely gets any new features in its release notes, leading some to believe it is outdated or deprecated.

In this article, I will explore this topic in detail to determine whether or not these claims are accurate.

So, let’s get rumbling!

OCAPI versus SCAPI

Salesforce B2C Commerce Cloud has a long-standing history with its OCAPI, which offers a broad range of APIs for various purposes. One typical integration that highlights the functionality of these APIs is Newstore. This mobile application solution uses customisation hooks in the provided cartridge to integrate with the APIs.

The SCAPI, or Salesforce Commerce API, is a relatively “new” set of APIs introduced on July 22, 2020. It offers third-party systems and headless front-ends a different way of interacting with SFCC (Salesforce Commerce Cloud) than the OCAPI (Open Commerce API) we had been using before.

However, there is one drawback to the SCAPI: not all APIs that exist in the OCAPI are available in the SCAPI, at least not yet.

Let’s keep score, shall we?

OCAPI: 1

SCAPI: 0

New APIs

In recent years, the SCAPI has introduced several new APIs that the OCAPI does not have. These new APIs have been implemented to address OCAPI gaps or expose new functionality, such as those related to SEO and CDN, allowing for more robust and comprehensive functionality.

SCAPI now offers a wide range of APIs for developers to use, allowing them to build customised solutions for their clients. As these new APIs have been developed explicitly for SCAPI, it is unlikely that the OCAPI will ever have access to them.

In the future, it is clear that any significant new APIs will only be added to the SCAPI, which aligns with the platform’s strategy.

OCAPI: 1

SCAPI: 1

SLAS

SLAS, or Shopper Login and API Access Service, is a Salesforce Commerce Cloud (SFCC) feature allowing third-party systems or headless front-ends to authenticate shoppers and make API calls.

It’s an authentication orchestration service that can handle various scenarios without requiring custom code for each one (some tweaking of parameters and configuration is still required, but that’s not the focus of this article):

- B2C Authentication: Normal login with Salesforce B2C Commerce Cloud

- Social Login (Third-party login): Login with platforms such as Google and Facebook

- Passwordless Login: Login via e-mail or SMS

- Trusted Agent: Have a third-party person or system login on behalf of a customer

Although it is possible to use this service in conjunction with OCAPI, it is more part of the SCAPI offering, so let us give a point to SCAPI in this case.

OCAPI: 1

SCAPI: 2

PWA Kit

Have you heard about the PWA Kit or Composable Storefront? You may have, as it’s the latest addition to the front-end options besides SiteGenesis and SFRA.

The Composable Storefront is a Headless storefront that connects to the back-end SFCC systems through the SCAPI. Although it used to be connected to the OCAPI due to some limitations with the hooks system, the latest version is now fully connected to the SCAPI.

It’s no secret that the Composable Storefront is the primary driver for these innovations.

Another point to SCAPI!

OCAPI: 1

SCAPI: 3

Oh my … things aren’t looking good for the OCAPI.

Infrastructure

The architectural setups of the OCAPI and SCAPI options are entirely different.

The OCAPI runs on the back end, in the same location as the Business Manager, the SFRA/SG storefront, and your custom code.

On the other hand, the SCAPI started out as a MuleSoft instance managed by Salesforce (no, you can’t access this, but I know you want to). In the current architecture, Cloudflare Workers have taken over the role that was previously played by MuleSoft.

Although the SCAPI has an extra layer in between, that layer lets Salesforce make their architecture more flexible (and composable): there is one point of entry, and the parts behind it can be upgraded, fixed, or replaced without anyone noticing. However, this setup has some downsides, such as more network hops between the systems, resulting in network delays that need to be considered. By replacing MuleSoft with Cloudflare, the added network delay should be minimal!

The OCAPI wins for its simplicity, but the SCAPI wins for its future-proof architecture. Nevertheless, this future-proof architecture can only work if it has been set up correctly, and we don’t have any view into that black box.

So, for me, both of them get a point here!

OCAPI: 2

SCAPI: 4

Rate Limits

APIs can be enjoyable to work with, but they are also vulnerable to DDoS attacks and to poorly designed clients, both of which lead to excessive API calls and a heavy server load. The OCAPI is designed to be safe and user-friendly, and Cloudflare and Salesforce-managed firewalls protect it to ensure server safety and limit the number of requests.

Although the rate-limiter is a straightforward “pass” or “block” method, it is essential to consider its impact and be prepared for the worst.

The SCAPI has implemented a new “Load Shedding” system to replace rate limits. This system provides a comprehensive view of what is happening behind the scenes.
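Whichever mechanism rejects your request, the client should be prepared to back off and try again. Below is a minimal sketch of a retry loop with capped exponential backoff. The status codes treated as retryable (429 for rate limiting, 503 for load shedding) are my assumption and should be verified against the documentation, and `send` is a hypothetical callable standing in for your real HTTP request.

```python
import time
from typing import Callable, Iterator, Tuple

# Assumption: 429 signals a rate limit and 503 signals load shedding.
RETRYABLE_STATUSES = {429, 503}


def backoff_delays(retries: int, base_seconds: float = 0.5, cap_seconds: float = 8.0) -> Iterator[float]:
    """Yield one delay per retry, doubling each time up to cap_seconds."""
    for attempt in range(retries):
        yield min(cap_seconds, base_seconds * (2 ** attempt))


def call_with_retry(
    send: Callable[[], Tuple[int, str]],
    max_attempts: int = 4,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Call send() until it returns a non-retryable status or attempts run out."""
    status, body = send()
    for delay in backoff_delays(max_attempts - 1):
        if status not in RETRYABLE_STATUSES:
            break
        sleep(delay)
        status, body = send()
    return status, body
```

Passing `sleep` as a parameter keeps the function easy to test, and in production you would probably add random jitter to the delays so that many clients do not retry at the same instant.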

OCAPI: 2

SCAPI: 5

Conclusion

The SCAPI outperforms the OCAPI in multiple ways, which is why the former was implemented. However, if you are still extensively using the OCAPI, there is no need to worry because you are not alone – even the SCAPI uses it behind the scenes.

Many SCAPI API calls are just a proxy for OCAPI calls. Consequently, as long as the SCAPI depends on the OCAPI, it is not going anywhere.

The post Unravelling the mystery of dates in the OCAPI appeared first on The Rhino Inquisitor.

When we integrate third-party systems with Salesforce B2C Commerce Cloud using OCAPI or SCAPI, we often have the requirement to filter data based on date ranges or only retrieve data that has been modified after a certain time.

But how can we achieve this? Are there any other options available? Let’s explore the various filtering and query options in detail.

Querying

Not all endpoints are alike, but within the OCAPI the way of searching for different objects remains the same: making use of Queries and Filtering options.

Here are some of the example endpoints:

Attributes

Make sure to check the documentation pages for the specific endpoint to view the supported attributes before building your query.

Date Format

When crafting these date filters, adherence to the ISO 8601 date format (YYYY-MM-DDThh:mm:ss.sssZ) is essential for the API to parse the values correctly. Additionally, ensure that the field names, like creation_date, valid_from, valid_to, and others, correspond to your Salesforce Commerce Cloud data model’s actual date-related fields.

2012-03-19T07:22:59Z // example
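If your integration is written in Python, a small helper like the one below can produce timestamps in this shape. It is a sketch rather than an official utility: the function name is made up, and it assumes you want UTC with millisecond precision, as used in the filter examples in this article.

```python
from datetime import datetime, timedelta, timezone


def to_ocapi_timestamp(moment: datetime) -> str:
    """Format a timezone-aware datetime as UTC ISO 8601 with milliseconds."""
    if moment.tzinfo is None:
        # A naive datetime is ambiguous, so refuse to guess its timezone.
        raise ValueError("naive datetime: attach a timezone before formatting")
    utc = moment.astimezone(timezone.utc)
    return utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{utc.microsecond // 1000:03d}Z"


# A local time two hours ahead of UTC is converted before formatting.
local = datetime(2020, 3, 8, 1, 30, tzinfo=timezone(timedelta(hours=2)))
print(to_ocapi_timestamp(local))
```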

Range Filter

If you need to find records that fall within a specific date interval, the range_filter is your go-to option. This filter can find records with a date value sitting between a specified start (from) and end (to) date.

    {
      "query": {
        "filtered_query": {
          "filter": {
            "range_filter": {
              "field": "creation_date",
              "from": "2020-03-08T00:00:00.000Z",
              "to": "2020-03-10T00:00:00.000Z"
            }
          },
          "query": {
            "match_all_query": {}
          }
        }
      }
    }
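When the same query is needed with changing dates (for example, a nightly job that fetches everything created since the last run), it helps to generate the body instead of hand-editing JSON. The helper below is a sketch with a made-up name. It mirrors the structure of the request above and allows either bound to be left out, which assumes the endpoint accepts open-ended ranges.

```python
import json
from typing import Optional


def date_range_query(field: str, from_iso: Optional[str] = None, to_iso: Optional[str] = None) -> dict:
    """Build a search body with a range_filter on a single date field."""
    if from_iso is None and to_iso is None:
        raise ValueError("provide at least one of from_iso or to_iso")
    range_filter = {"field": field}
    if from_iso is not None:
        range_filter["from"] = from_iso
    if to_iso is not None:
        range_filter["to"] = to_iso
    return {
        "query": {
            "filtered_query": {
                "filter": {"range_filter": range_filter},
                "query": {"match_all_query": {}},
            }
        }
    }


# Everything created since a given moment (open-ended upper bound).
body = date_range_query("creation_date", from_iso="2020-03-08T00:00:00.000Z")
print(json.dumps(body, indent=2))
```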

Range2 Filter

To deal with scenarios where you have two date fields and want to filter records with an overlapping date range, use the range2_filter. This allows the specification of a date range that overlaps the range between the two fields you are considering.

A Range2Filter allows you to restrict search results to hits where the first range (R1), defined by a pair of attributes (e.g., valid_from and valid_to), has a specific relationship to a second range (R2), defined by two values (from_value and to_value). The relationship between the two ranges is determined by the filter_mode, which can be one of the following:

- overlap: R1 overlaps fully or partially with R2

- containing: R1 contains R2

- contained: R1 is contained in R2

    {
      "query": {
        "filtered_query": {
          "filter": {
            "range2_filter": {
              "from_field": "valid_from",
              "to_field": "valid_to",
              "filter_mode": "overlap",
              "from_value": "2007-01-01T00:00:00.000Z",
              "to_value": "2017-01-01T00:00:00.000Z"
            }
          },
          "query": { "match_all_query": {} }
        }
      }
    }
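The three filter modes are easier to reason about once you see them as plain comparisons. The function below is a local model of the semantics described above, not the platform's implementation. It assumes both ranges include their end points, which you should verify against the documentation for the endpoint you are using.

```python
from datetime import date


def range2_matches(r1_from: date, r1_to: date, r2_from: date, r2_to: date, filter_mode: str = "overlap") -> bool:
    """Return True when R1 (the record's fields) relates to R2 (the query values) as requested."""
    if filter_mode == "overlap":
        # The ranges share at least one point.
        return r1_from <= r2_to and r2_from <= r1_to
    if filter_mode == "containing":
        # R1 fully covers R2.
        return r1_from <= r2_from and r2_to <= r1_to
    if filter_mode == "contained":
        # R1 lies fully inside R2.
        return r2_from <= r1_from and r1_to <= r2_to
    raise ValueError(f"unknown filter_mode: {filter_mode}")


# R2 is the query range used in the request above: 2007-01-01 to 2017-01-01.
query_from, query_to = date(2007, 1, 1), date(2017, 1, 1)
# A record valid from 2010 to 2012 overlaps the query range and is contained in it.
print(range2_matches(date(2010, 1, 1), date(2012, 1, 1), query_from, query_to, "overlap"))
print(range2_matches(date(2010, 1, 1), date(2012, 1, 1), query_from, query_to, "contained"))
```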

Bool Filter

Sometimes, the need for complexity arises when constructing date-based queries. The bool_filter permits the combination of numerous filters for complex logical expressions. This filter is specifically helpful for creating compound date queries that may, for instance, combine status checks with date ranges.

    {
      "query": {
        "filtered_query": {
          "query": {
            "match_all_query": {}
          },
          "filter": {
            "bool_filter": {
              "operator": "and",
              "filters": [
                {
                  "term_filter": {
                    "field": "status",
                    "operator": "is",
                    "values": ["open"]
                  }
                },
                {
                  "range_filter": {
                    "field": "creation_date",
                    "from": "2023-01-01T00:00:00.000Z"
                  }
                }
              ]
            }
          }
        }
      }
    }
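Nested filters like this get hard to read quickly, so a few tiny builder functions can keep the intent visible. This is a sketch with hypothetical helper names that simply reproduces the JSON structure shown above.

```python
from typing import Iterable, Optional


def term_filter(field: str, values: Iterable[str], operator: str = "is") -> dict:
    return {"term_filter": {"field": field, "operator": operator, "values": list(values)}}


def range_filter(field: str, from_iso: Optional[str] = None, to_iso: Optional[str] = None) -> dict:
    body = {"field": field}
    if from_iso is not None:
        body["from"] = from_iso
    if to_iso is not None:
        body["to"] = to_iso
    return {"range_filter": body}


def all_of(*filters: dict) -> dict:
    """Combine filters so that every one of them must match."""
    return {"bool_filter": {"operator": "and", "filters": list(filters)}}


def filtered_query(filter_body: dict) -> dict:
    """Wrap a filter in a match-all filtered_query search body."""
    return {"query": {"filtered_query": {"query": {"match_all_query": {}}, "filter": filter_body}}}


# Open records created on or after 1 January 2023.
search_body = filtered_query(
    all_of(
        term_filter("status", ["open"]),
        range_filter("creation_date", from_iso="2023-01-01T00:00:00.000Z"),
    )
)
```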

Term Query

For precision filtering, where a field must match an exact date, the term_query becomes the instrument of choice. This query matches records based on absolute equality with the specified date.

    {
      "query": {
        "term_query": {
          "fields": ["creation_date"],
          "operator": "is",
          "values": ["2023-04-01T00:00:00.000Z"]
        }
      }
    }

Custom Endpoint

This option is currently in beta and not yet fully available, but you can create custom GET endpoints tailored entirely to your requirements.

When creating these endpoints, it’s important to consider performance and caching – these are your responsibility when utilising this option.

Conclusion

The search API capabilities of OCAPI in Salesforce B2C Commerce Cloud offer robust and flexible options for date-related searches.

You can customize your searches using range_filter, range2_filter, bool_filter, and term_query as per your requirements. It is important to use the correct date format and field names to make the most out of these tools. These data querying capabilities can help you segment promotional data, manage catalog validity, or filter orders based on dates, making your commerce data handling more streamlined.

Happy coding!

The post New APIs and Features for a Headless B2C Commerce Cloud appeared first on The Rhino Inquisitor.

The holiday period was quiet for a long time regarding Salesforce B2C Commerce Cloud releases. This was because the monolithic system required the deployment of all components, which carried the risk of bugs.

However, more options are now available with multiple “layers” in the Headless architecture. Each layer can have its release schedule, and some layers are more modular than others, allowing for finer-grained releases that will not impact the rest (at least in theory).

Major new releases are happening for the first time during the holiday season. And it’s pretty exciting!

Managed Runtime

Storefront Preview

The “Shop the Future” feature seems to have been on the wishlist since the release of the PWA Kit. This feature was highly sought-after by merchandisers as it allowed them to set up promotions and content in advance and see how they would appear on the site.

However, it can be challenging to implement this feature without the right APIs in a Headless or Composable Commerce setup. Fortunately, this challenge can be overcome with the “Shop the Future” and “Shopper Context” options provided by the SCAPI.

This is a big win for any project already on or going to the Composable Storefront!

Changes for the future

Headless and Composable architectures bring great flexibility for the future but pose particular challenges in monitoring and analytics. One of the significant challenges is consolidating data from multiple entities.

Salesforce has now made it mandatory to specify the back-end you connect to from your storefront. This eliminates guesswork and ensures that logs are sent to the Log Center linked to the respective environment.

Automatically forwarding logs to the Log Center offers multiple benefits to Salesforce support and users of the Composable Storefront. With this feature, logs for HTTP requests made on production-marked environments are sent automatically, enabling both groups to better anticipate and troubleshoot any issues that may arise.

PWA Kit v3.2.1

With the changes happening in the Managed Runtime, two new releases of the PWA Kit have happened in the past month:

OCAPI & SCAPI

Shopper SEO - Headless URL Resolution

There is a new API in town. SiteGenesis and SFRA have always been able to resolve any type of URL that you configured in Business Manager and automatically redirect the user to the right place!

But for the PWA Kit or Headless projects, that piece of magic was always missing – until now!

Meet the new URL Resolution API!

I believe that in the future, we will witness endpoints to address some of the gaps that exist compared to SFRA. I have also learned that an upcoming feature will enable direct resolution to the SCAPI payload rather than just the relative ObjectID.

Stores

If you remember, the SCAPI release included a “Shopper Stores” group that allowed developers to build their own Store Locator in Headless scenarios.

Unfortunately, this feature was short-lived and disappeared soon after. However, with the Stores API now back in action, developers can again use the “Shopper Stores” group to create custom store locators that meet their specific needs.

This significant development will be welcomed by those eagerly waiting for this functionality to return. I only question how quickly this will make it into the PWA Kit priority-wise. Maybe this could become one of the more extensive community contributions. Who knows?

More on the horizon?

I received some exciting news about new APIs that Salesforce is currently developing. A custom object API and site preferences API are in active development and are expected to be released in the next cycle.

What APIs would you like to see? Maybe it is … (slowly walks over to the next section)

Time for Feedback

Have you ever felt helpless when you couldn’t provide feedback on a product? Worry no more!

Salesforce is looking for your valuable feedback on their Composable Storefront. They want to hear your honest opinion – the good, the bad, and everything in between. Your feedback will help them improve their product and make it even better.

What are you waiting for? Share your thoughts and help Salesforce build a product that meets your needs!

The post New APIs and Features for a Headless B2C Commerce Cloud appeared first on The Rhino Inquisitor.

]]>The post Effortlessly Create External Orders in Salesforce Commerce Cloud appeared first on The Rhino Inquisitor.

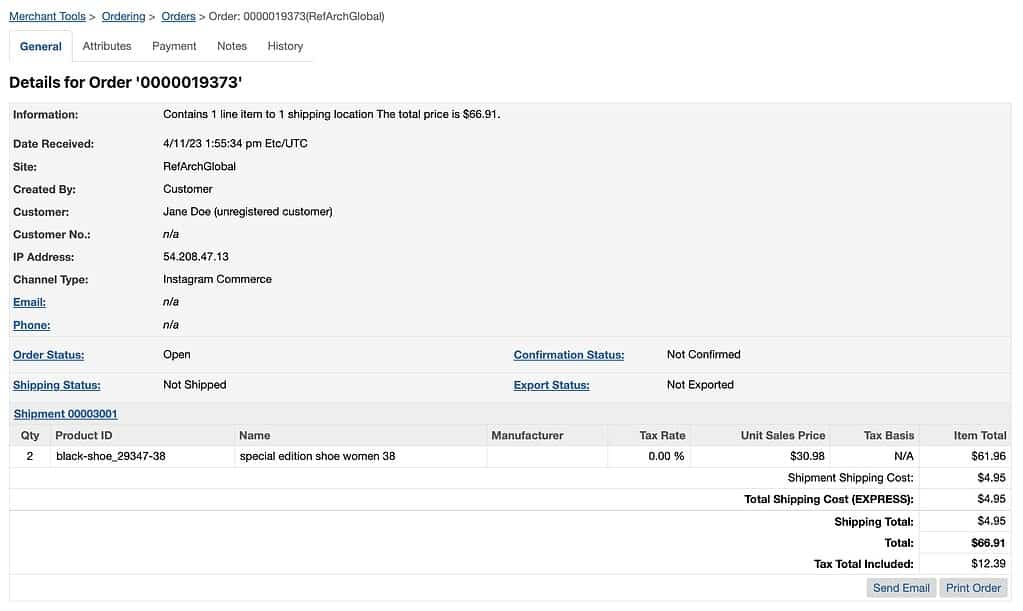

]]>In this article, we will discuss the createOrders API used to create orders in the Commerce Cloud platform. The createOrders API is designed to create a fully calculated, paid, or authorised order on the fly in the Commerce Cloud platform. But how does it work?

When to use the createOrders API

With this API, creating an order is a breeze as it eliminates the need to go through the various steps required to create a basket, including authorisation, guest information, managing addresses, payment, and inventory management.

One of the most common scenarios where this API comes in handy is selling on social channels and offering in-app purchases. The API enables you to send the entire order to be shipped without going through the usual steps. A third-party provider managing recurring orders can also use this API to streamline their processes.

The potential use cases for this API are numerous and diverse, and it’s a valuable tool for developers and architects!

Things to keep in mind

If you plan to use this API, know that it relies on the origin system that creates the order. This means you must ensure that all the necessary checks and verifications are done in the third-party system before transferring the order into B2C Commerce Cloud.

So, what kind of checks are we talking about? Well, a few things need to be verified before the order can be processed. For example, the address to which the order is being shipped must be validated and corrected if there are any errors. You wouldn’t want the package to end up at the wrong address.

Another thing that needs to be verified is the payment information. This is important to ensure the transaction is legitimate and the customer can pay for the order. Nobody wants to deal with fraudulent transactions; verifying the payment information is one way to prevent that.

Some technical things must be handled as well. For instance, the order price needs to be calculated accurately. And, if there’s limited inventory for the item being ordered, the stock must be reserved and released promptly.

These checks and verifications are essential to ensure that the order is processed correctly and that the customer is satisfied with their purchase. Selling products on a third-party channel is one thing, but you don’t want them to get stuck in the process!
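
To make the "calculated accurately" point concrete, here is a minimal sketch of a pre-flight check the origin system could run before sending an order. The field names match the createOrders payload shown in the Implementation section, but the rules themselves (gross price equals net price plus tax, order total equals product gross prices plus shipping, and so on) are my own assumptions, inferred from the numbers in that example rather than from any documented API contract. The function name `findOrderInconsistencies` is hypothetical.

```javascript
// Work in integer cents so floating-point drift cannot cause false alarms.
const toCents = (amount) => Math.round(amount * 100);

// Sum one numeric field over a list of objects, in cents.
const sumCents = (items, field) =>
    items.reduce((total, item) => total + toCents(item[field] ?? 0), 0);

// Returns a list of human-readable problems; an empty list means the totals add up.
// ASSUMPTION: these rules are inferred from the example payload, not from API docs.
function findOrderInconsistencies(order) {
    const problems = [];
    const items = order.productItems ?? [];
    const shipments = order.shipments ?? [];
    const payments = order.paymentInstruments ?? [];

    for (const item of items) {
        if (toCents(item.netPrice) + toCents(item.tax) !== toCents(item.grossPrice)) {
            problems.push(`${item.productId}: netPrice + tax does not equal grossPrice`);
        }
    }

    const expectedTotal = sumCents(items, 'grossPrice') + sumCents(shipments, 'shippingTotal');
    if (expectedTotal !== toCents(order.orderTotal)) {
        problems.push('orderTotal does not equal product gross prices plus shipping');
    }

    const expectedTax = sumCents(items, 'tax') + sumCents(shipments, 'taxTotal');
    if (expectedTax !== toCents(order.taxTotal)) {
        problems.push('taxTotal does not equal product tax plus shipping tax');
    }

    const paid = payments.reduce(
        (total, payment) => total + toCents(payment.paymentTransaction?.amount ?? 0),
        0
    );
    if (paid !== toCents(order.orderTotal)) {
        problems.push('payment amounts do not add up to orderTotal');
    }

    return problems;
}
```

Run against the example order from this article, the list comes back empty: 49.57 + 12.39 = 61.96 for the product line, and 61.96 + 4.95 shipping = 66.91 for the order total.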

Implementation

Getting a SLAS API key

We can’t have just anyone pushing orders into our system, can we? So the first step is to create an API key in SLAS!

In this case, we will be creating a private client. There is a visual UI that makes this easier, which I explain in a different post.

There are a few key differences:

- Which App Type will be used?: “BFF or Web App”.

Choosing this option will create a “private” client.

- Do you want the default shopper scopes?: Unchecked

- Enter custom shopper scopes: sfcc.orders.rw sfcc.ts_ext_on_behalf_of

Authenticating

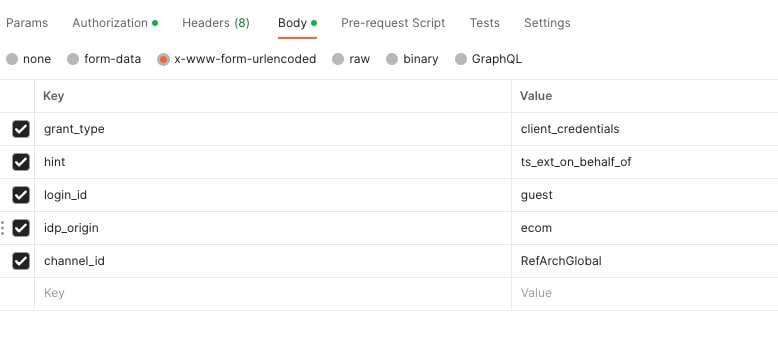

Before we can start pushing in orders for anonymous or registered users, we need to get a Trusted System Access Token. With this token, we will allow our third-party system to act on behalf of a guest or registered user without knowing their credentials.

URL: https://{shortCode}.api.commercecloud.salesforce.com/shopper/auth/v1/organizations/{organizationId}/oauth2/trusted-system/token

Authorisation: Basic Authentication

Authorisation Format: {SLAS Client}:{SLAS Secret}

Body: application/x-www-form-urlencoded

    // guest
    grant_type:client_credentials
    hint:ts_ext_on_behalf_of
    login_id:guest
    idp_origin:ecom
    channel_id:RefArchGlobal

Within this body, we are authenticating a guest customer via “ecom” (B2C Commerce Cloud) for our site “RefArchGlobal”.

When submitting your request, the following response should pop out!

    {
        "access_token": "JWT TOKEN",
        "id_token": "",
        "refresh_token": "A-dhgTIz-WCGVDrt5OwVb4lWD3f2-KmkCAI7e4",
        "expires_in": 1800,
        "token_type": "BEARER",
        "usid": "0884d762-9f45-46e2-812a-2f9cd1d89",
        "customer_id": "abkbE2leo1lHgRmuwYlqlKsW",
        "enc_user_id": "",
        "idp_access_token": null
    }

And with our access_token we can continue our journey and push an order into Commerce Cloud!
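
If you prefer code over a REST client, the token request described above could be sketched roughly like this. It is a sketch under assumptions, not an official client: the function names are hypothetical, `shortCode`, `organizationId`, `clientId`, `clientSecret` and `siteId` are placeholders you need to fill in, and it relies on the global `fetch` and `btoa` found in browsers and recent Node.js versions.

```javascript
// Builds the trusted-system token request (URL, headers, form body) without sending it.
// All config values are placeholders supplied by the caller.
function buildTrustedSystemTokenRequest({ shortCode, organizationId, clientId, clientSecret, siteId, loginId = 'guest' }) {
    const url = `https://${shortCode}.api.commercecloud.salesforce.com/shopper/auth/v1/organizations/${organizationId}/oauth2/trusted-system/token`;

    // Same form fields as the guest example in this article.
    const body = new URLSearchParams({
        grant_type: 'client_credentials',
        hint: 'ts_ext_on_behalf_of',
        login_id: loginId,
        idp_origin: 'ecom',
        channel_id: siteId
    });

    return {
        url,
        options: {
            method: 'POST',
            headers: {
                // Basic authentication: base64 of "{SLAS Client}:{SLAS Secret}".
                Authorization: `Basic ${btoa(`${clientId}:${clientSecret}`)}`,
                'Content-Type': 'application/x-www-form-urlencoded'
            },
            body: body.toString()
        }
    };
}

// Sends the request and returns the access_token field of the JSON response.
async function getTrustedSystemToken(config) {
    const { url, options } = buildTrustedSystemTokenRequest(config);
    const response = await fetch(url, options);
    if (!response.ok) {
        throw new Error(`Token request failed with status ${response.status}`);
    }
    const { access_token: accessToken } = await response.json();
    return accessToken;
}
```

Splitting "build" from "send" is a deliberate choice: the request can be inspected or unit tested without touching the network, and the client secret never needs to leave your server-side code.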

Creating an order

Almost there! Now we have everything to start creating our order (except the order itself). We need to do a second API call using the bearer token we generated in the previous step, linking us to that specific customer.

URL: https://{shortCode}.api.commercecloud.salesforce.com/checkout/orders/v1/organizations/{organizationId}/orders

Authorisation: Bearer token

Body:

    {
        "billingAddress": {
            "address1": "43 Main Rd.",
            "city": "Burlington",
            "firstName": "Jane",
            "lastName": "Doe"
        },
        "channelType": "instagramcommerce",
        "currency": "USD",
        "orderNo": "0000019373",
        "orderTotal": 66.91,
        "taxTotal": 12.39,
        "paymentInstruments": [
            {
                "paymentMethodId": "PAYPAL",
                "paymentTransaction": {
                    "amount": 66.91,
                    "transactionId": "abc13384ajsgdk1"
                }
            }
        ],
        "productItems": [
            {
                "basePrice": 30.98,
                "grossPrice": 61.96,
                "netPrice": 49.57,
                "productId": "black-shoe_29347-38",
                "productName": "special edition shoe women 38",
                "quantity": 2,
                "shipmentId": "shipment1",
                "tax": 12.39
            }
        ],
        "shipments": [
            {
                "shipmentId": "shipment1",
                "shippingAddress": {
                    "address1": "43 Main Rd.",
                    "city": "Burlington",
                    "firstName": "Jane",
                    "lastName": "Doe"
                },
                "shippingMethod": "EXPRESS",
                "shippingTotal": 4.95,
                "taxTotal": 0
            }
        ]
    }

After submitting this request we should get an empty response with status 201 (CREATED).
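
The same call could be sketched in JavaScript as follows. Again, treat it as a sketch: the function names are hypothetical, the URL is copied from this article, the `Content-Type` header is my assumption for a JSON body, and `accessToken` is the bearer token from the previous step.

```javascript
// Builds the createOrders request without sending it.
// shortCode, organizationId and accessToken are placeholders supplied by the caller.
function buildCreateOrderRequest({ shortCode, organizationId, accessToken, order }) {
    return {
        url: `https://${shortCode}.api.commercecloud.salesforce.com/checkout/orders/v1/organizations/${organizationId}/orders`,
        options: {
            method: 'POST',
            headers: {
                Authorization: `Bearer ${accessToken}`,
                // ASSUMPTION: the JSON body is sent as application/json.
                'Content-Type': 'application/json'
            },
            body: JSON.stringify(order)
        }
    };
}

// Sends the order and resolves to true when the platform answers 201 (CREATED).
async function createOrder(config) {
    const { url, options } = buildCreateOrderRequest(config);
    const response = await fetch(url, options);
    if (response.status !== 201) {
        throw new Error(`Order creation failed with status ${response.status}`);
    }
    return true;
}
```

Because a successful response is empty, the sketch only checks the status code and does not try to parse a body.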

And with that, our order is visible in the Business Manager!

GMV

The method outlined above impacts the GMV, which can lead to higher licensing costs. But don’t worry: that’s where negotiation with Salesforce comes in.

The post The Salesforce B2C Commerce Cloud URL: Cracking the Code appeared first on The Rhino Inquisitor.

It should be no secret that a URL is a vital part of any website. In this article, we will dissect and explain the different parts of a Salesforce B2C Commerce Cloud URL and provide code examples on how to access this information in an SFCC controller and in React using the useLocation() hook.

The URL Structure

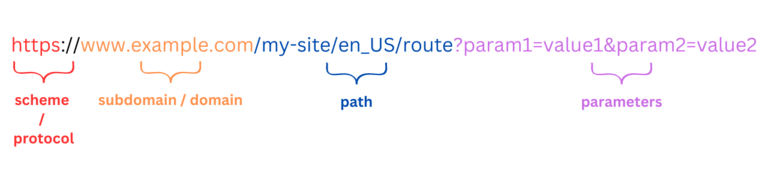

A typical Salesforce B2C Commerce Cloud URL consists of the following components:

1. Protocol (https only)

2. Domain & Subdomain (your site’s domain)

3. Path (Including the Pipeline Name in non-SEO URLs)

4. Query Parameters (optional, used to pass additional data to a server-side controller or React component)

Example URLs:

https://www.example.com/on/demandware.store/Sites-MySite-Site/en_US/MyPipeline-MyAction?param1=value1&param2=value2

https://www.example.com/my-site/en_US/route?param1=value1&param2=value2
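
To see these components side by side, here is a small sketch that takes the first example URL apart with the standard `URL` class, which is available in browsers and Node.js alike. The helper name `dissectUrl` is just something I made up for illustration.

```javascript
// Splits a URL into the components discussed in this article.
function dissectUrl(href) {
    const url = new URL(href);
    return {
        protocol: url.protocol,                    // note: includes the trailing colon
        hostname: url.hostname,
        pathname: url.pathname,
        query: Object.fromEntries(url.searchParams)
    };
}

const parts = dissectUrl(
    'https://www.example.com/on/demandware.store/Sites-MySite-Site/en_US/MyPipeline-MyAction?param1=value1&param2=value2'
);

console.log(parts.protocol); // "https:"
console.log(parts.hostname); // "www.example.com"
console.log(parts.pathname); // "/on/demandware.store/Sites-MySite-Site/en_US/MyPipeline-MyAction"
console.log(parts.query);    // { param1: 'value1', param2: 'value2' }
```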

Protocol

The protocol is the foundation of how data is transmitted across the internet. On the web, the protocol is either HTTP (Hypertext Transfer Protocol) or HTTPS (Hypertext Transfer Protocol Secure). HTTP is the standard protocol for transmitting data between a web server and a browser, while HTTPS is a more secure version that uses encryption to protect the data being sent.

Salesforce B2C Commerce Cloud only allows HTTPS and has blocked the use of HTTP for quite a few years now. When working locally with the PWA Kit, HTTP is used, however.

    // SFRA - SiteGenesis
    request.getHttpProtocol();

    // PWA Kit client side
    window.location.protocol

    // PWA Kit server side (getProps)
    req.protocol

Domain & subdomain

    // SFRA - SiteGenesis
    request.getHttpHost();

    // PWA Kit client side
    window.location.hostname

    // PWA Kit server side (getProps)
    req.hostname

The domain is the unique address of your website on the internet. It is the identifier that users will type into their browser to access your site, and search engines also use it to index your pages.

In a Salesforce B2C Commerce Cloud URL, the domain represents your site’s custom domain or a subdomain provided by Salesforce.

Choosing a domain that is easy to remember and represents your brand is crucial, as it plays a vital role in your site’s visibility and credibility.

Path

    // SFRA - SiteGenesis
    request.getHttpPath();

    // PWA Kit
    import {useLocation} from 'react-router-dom'

    const { pathname } = useLocation()

The “path” of a URL refers to the hierarchical structure of a website that designates the specific location of a resource, such as a web page, an image, or a file, on the web server. The path helps users and search engines navigate and understand the organisation of a website’s content. It is an essential part of the URL, following the domain name and starting with a forward slash (/).

A URL path is typically organised into multiple segments, separated by forward slashes. Each segment represents a level in the site’s hierarchical structure. The leftmost segment is the highest level, and the following segments represent subdirectories or resources within the higher-level directories.

For example, in the URL “https://www.example.com/blog/2021/post-title”, the path is “/blog/2021/post-title”. This path indicates that the specific web page is located within the “2021” subdirectory of the “blog” directory on the server.

A well-structured and descriptive URL path can improve a website’s search engine optimization (SEO) and user experience (UX) by making it easier for users and search engines to understand the relationship between different pages and resources on the site.
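
As a quick illustration of those segments, the sketch below splits a path into its levels and, for the non-SEO example URL from earlier, pulls out the site, locale, controller and action. Both helper names are hypothetical, and the pattern `/on/demandware.store/Sites-{siteId}-Site/{locale}/{Controller}-{Action}` is an assumption based on that single example, so treat it as a starting point rather than a complete parser.

```javascript
// Splits a path into its segments, dropping the empty string before the first slash.
function splitPath(pathname) {
    return pathname.split('/').filter(Boolean);
}

// ASSUMPTION: layout inferred from the non-SEO example URL in this article.
const NON_SEO_PATH = /^\/on\/demandware\.store\/Sites-([^/]+)-Site\/([^/]+)\/([^/-]+)-([^/]+)$/;

// Returns the parts of a non-SEO path, or null when the path has a different shape.
function parseNonSeoPath(pathname) {
    const match = NON_SEO_PATH.exec(pathname);
    if (!match) {
        return null;
    }
    const [, siteId, locale, controller, action] = match;
    return { siteId, locale, controller, action };
}

console.log(splitPath('/blog/2021/post-title'));
// [ 'blog', '2021', 'post-title' ]

console.log(parseNonSeoPath('/on/demandware.store/Sites-MySite-Site/en_US/MyPipeline-MyAction'));
// { siteId: 'MySite', locale: 'en_US', controller: 'MyPipeline', action: 'MyAction' }
```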

Query Parameters

    // SFRA - SiteGenesis
    request.getHttpParameterMap();
    request.getHttpParameters();
    request.getHttpQueryString();

    // PWA Kit
    import {useLocation} from 'react-router-dom'

    const { search } = useLocation()
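
The `search` value you get from `useLocation()` is still a raw string such as `?param1=value1&param2=value2`. One way to turn it into something usable is the standard `URLSearchParams` class; the helper name `readQuery` below is hypothetical.

```javascript
// Parses a raw query string (with or without the leading "?") into a plain object.
function readQuery(search) {
    return Object.fromEntries(new URLSearchParams(search));
}

const query = readQuery('?param1=value1&param2=value2');

console.log(query.param1); // "value1"
console.log(query);        // { param1: 'value1', param2: 'value2' }
```

Keep in mind that a plain object only holds one value per key, so if a parameter can appear multiple times, `URLSearchParams.getAll()` is the safer choice.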

Not rocket science

Fortunately, obtaining this information is not rocket science, and it can be easily accessed. But having a cheat sheet can be quite helpful, don’t you think?

The post What is the Salesforce B2C Commerce Cloud Managed Runtime? appeared first on The Rhino Inquisitor.

In the last two years, more vocabulary has been added to the Salesforce B2C Commerce Cloud ecosystem because of the Composable Storefront.

And one of the terms you might have heard is “Managed Runtime”, which provides the infrastructure to deploy, host, and monitor your Progressive Web App (PWA) Kit storefront. In this article, we will explore the inner workings of Managed Runtime, its benefits, and how it empowers developers to build storefronts without having to think (much) about the underlying infrastructure.

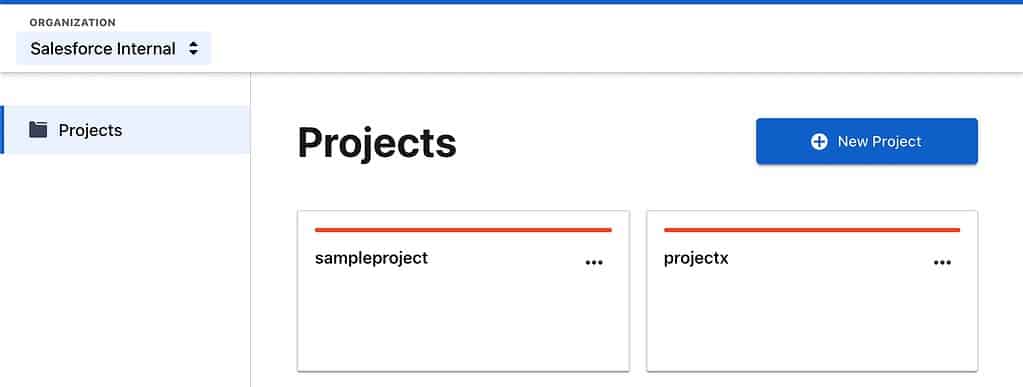

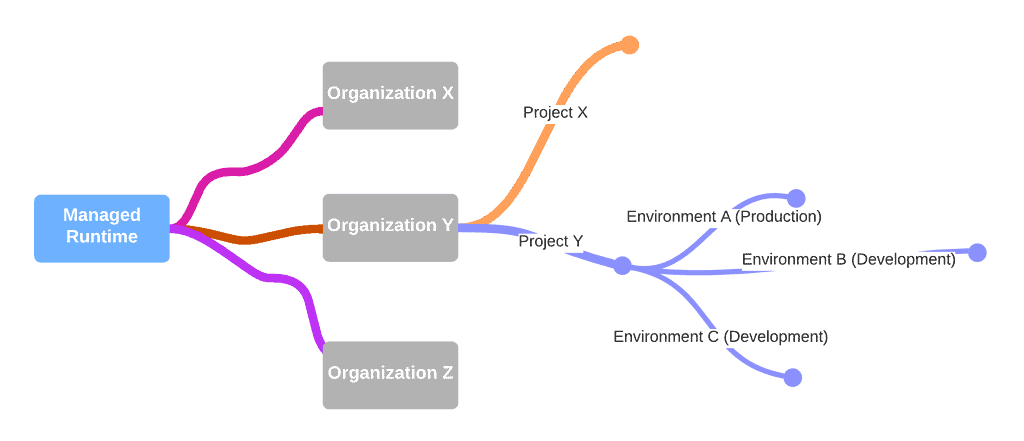

What does the Managed Runtime do?

The Managed Runtime is designed to support applications created from a PWA Kit template in the PWA Kit repository on GitHub. It operates within environments, which is the term to describe all of the cloud infrastructure and configuration values. It is possible within that infrastructure to differentiate between development and production environments.

Developers will use the PWA Kit tools to generate a bundle, a snapshot of the storefront code at a specific time, and push it to Managed Runtime. Once the bundle is pushed, it is possible to use the Runtime Admin Web Interface or Managed Runtime API to designate that bundle as “deployed.”

Each project can have multiple bundles, but each environment has only one “deployed” bundle, similar to how you can only have one active “Code Version” on the server side.

The Managed Runtime operates within a hierarchy of organisations and projects. Organisations can contain multiple projects for various storefronts, and each project can contain multiple environments.

This structure allows for efficiently managing multiple environments and separating different work streams.
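
If it helps to picture that hierarchy, here is a purely conceptual model of it in code. To be clear, this is not the Managed Runtime API: the `Project` class and its methods are invented for illustration, and only the rules from the text above are encoded (many bundles per project, many environments per project, exactly one deployed bundle per environment).

```javascript
// Conceptual model only: NOT the Managed Runtime API.
class Project {
    constructor(name) {
        this.name = name;
        this.bundles = new Map();      // bundleId -> immutable bundle snapshot
        this.environments = new Map(); // environment name -> { deployedBundleId }
        this.nextBundleId = 1;
    }

    // Pushing a bundle stores a snapshot; it is not live anywhere yet.
    pushBundle(message) {
        const id = this.nextBundleId++;
        this.bundles.set(id, Object.freeze({ id, message }));
        return id;
    }

    addEnvironment(name) {
        this.environments.set(name, { deployedBundleId: null });
    }

    // Deploying replaces whatever bundle was deployed before: one per environment.
    deploy(environmentName, bundleId) {
        const environment = this.environments.get(environmentName);
        if (!environment) {
            throw new Error(`Unknown environment: ${environmentName}`);
        }
        if (!this.bundles.has(bundleId)) {
            throw new Error(`Unknown bundle: ${bundleId}`);
        }
        environment.deployedBundleId = bundleId;
    }
}

const project = new Project('my-storefront');
project.addEnvironment('production');
const first = project.pushBundle('initial release');
const second = project.pushBundle('bug fix');
project.deploy('production', first);
project.deploy('production', second); // "production" now serves only the second bundle
```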

AWS Lambda

Under the hood, the Managed Runtime uses AWS Lambda, a serverless computing service provided by Amazon Web Services (AWS) that allows you to run your code without provisioning or managing servers.

Curious about Lambda? Here you go!

Benefits of the Managed Runtime for businesses

When a platform offers features, there are a lot of questions that will go through your mind. And one of them will probably be, “What benefits does it bring?”:

- Included in the license: It is not a phrase you often see in the Salesforce ecosystem, but the Managed Runtime is ready to be taken advantage of if you have a license for B2C Commerce Cloud!

- Simplified deployment: Managed Runtime streamlines deploying and hosting your PWA Kit storefront, meaning that developers only need to develop – and not worry about the infrastructure. And that saves time and, ultimately, money.

- Scalability: Nothing new to B2C Commerce Cloud, but the infrastructure provided by Managed Runtime allows your storefront to scale seamlessly as your business grows, ensuring optimal performance and customer experience, just like the “monolithic solution”.

- Security: Managed Runtime offers robust security features that protect your storefront from potential threats and vulnerabilities, just as the platform does for the back end.

- Improved troubleshooting: The immutable nature of bundles allows for a complete and accurate history of deployments, making it easier to identify and resolve issues or even do a quick rollback to a previous version!

Benefits of the Managed Runtime for developers

Developers working with Salesforce B2C Commerce Cloud can leverage this runtime to:

- Accelerate development: This was already mentioned, but the fact that, as a developer, you do not have to worry about the infrastructure is a significant benefit!